Deep Network Interpolation [Paper] [Project Page]

By Xintao Wang, Ke Yu, Chao Dong, Xiaoou Tang, Chen Change Loy

```bibtex
@Article{wang2018dni,
  author={Wang, Xintao and Yu, Ke and Dong, Chao and Tang, Xiaoou and Loy, Chen Change},
  title={Deep network interpolation for continuous imagery effect transition},
  journal={arXiv preprint arXiv:1811.10515},
  year={2018}
}
```

The following YouTube video shows several DNI examples. See our paper or the project page for more applications.

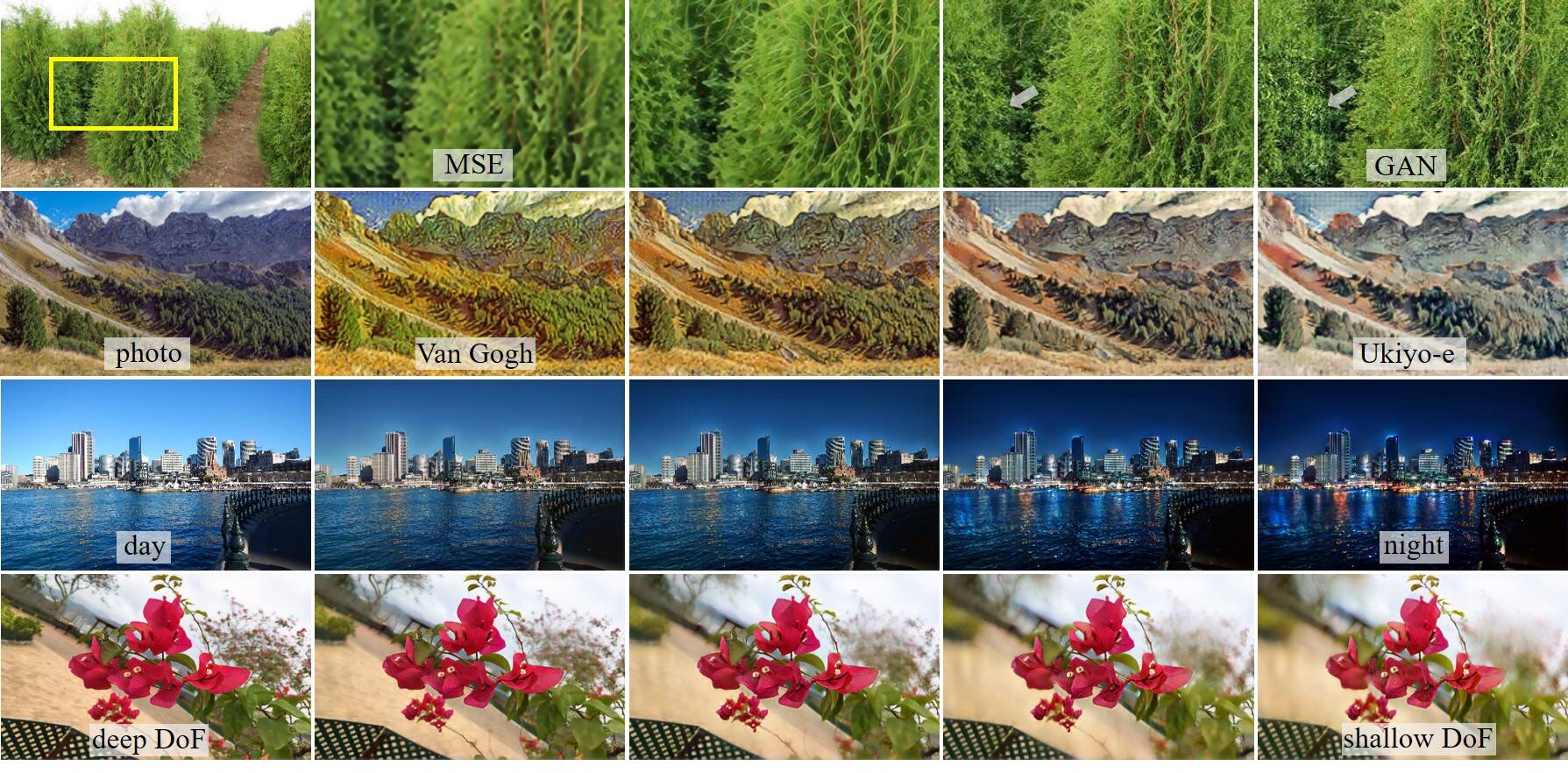

- We propose a simple yet universal approach, deep network interpolation (DNI), for smooth and continuous imagery effect transition without further training.

- Unlike previous works that operate in the feature space, we investigate manipulation in the parameter space of neural networks.

- Our analyses show that the filters learned for several related tasks change continuously. We believe these underlying correlations among learned filters are worth exploiting to further extend the capability and practicality of existing models.
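Written out, the interpolation acts independently on every parameter tensor of two networks A and B that share the same architecture. In the notation below (introduced here for illustration, not taken from the paper), $k$ indexes parameter tensors and $\alpha$ is the interpolation coefficient, typically chosen in $[0, 1]$:

$$
\theta^{(k)}_{\text{interp}} = \alpha\,\theta^{(k)}_{A} + (1 - \alpha)\,\theta^{(k)}_{B}
$$

Setting $\alpha = 1$ recovers network A and $\alpha = 0$ recovers network B; intermediate values give the in-between effects.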

DNI is simple and can be implemented in a few lines of code.

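```python
import torch
from collections import OrderedDict

alpha = 0.3  # interpolation coefficient: 1.0 gives net_A, 0.0 gives net_B

# Both checkpoints are assumed to be state dicts of the same architecture,
# so they share identical keys and tensor shapes.
net_A = torch.load('path_to_net_A.pth')
net_B = torch.load('path_to_net_B.pth')

net_interp = OrderedDict()
for k, v_A in net_A.items():
    v_B = net_B[k]
    # Linearly interpolate each parameter tensor.
    net_interp[k] = alpha * v_A + (1 - alpha) * v_B

# Save the interpolated weights (placeholder path).
torch.save(net_interp, 'path_to_net_interp.pth')
```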
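To see what sweeping the coefficient does without loading real checkpoints, here is a dependency-free sketch. It assumes plain Python lists of floats in place of torch tensors. The names `interpolate_params` and `conv1d` and the two 3-tap filters are hypothetical toys, not part of the DNI code. Each "network" is a single filter: an identity filter (A) and a small blur (B).

```python
from collections import OrderedDict


def interpolate_params(params_a, params_b, alpha):
    """Return a new dict with alpha * params_a + (1 - alpha) * params_b per key.

    Each input maps a parameter name to a flat list of floats, a stand-in for
    a torch tensor. Both must come from the same architecture: identical keys
    and matching lengths.
    """
    if params_a.keys() != params_b.keys():
        raise KeyError("networks must have identical parameter names")
    out = OrderedDict()
    for k, v_a in params_a.items():
        v_b = params_b[k]
        if len(v_a) != len(v_b):
            raise ValueError(f"shape mismatch for parameter {k!r}")
        out[k] = [alpha * x + (1 - alpha) * y for x, y in zip(v_a, v_b)]
    return out


def conv1d(signal, kernel):
    """'Valid' 1-D correlation, standing in for a one-layer network."""
    n = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(n))
        for i in range(len(signal) - n + 1)
    ]


# Hypothetical toy "networks": one 3-tap filter each.
net_A = OrderedDict(filter=[0.0, 1.0, 0.0])    # identity: leaves the signal unchanged
net_B = OrderedDict(filter=[0.25, 0.5, 0.25])  # blur: spreads each spike

if __name__ == "__main__":
    signal = [0, 0, 4, 0, 0]  # a single spike
    for alpha in (0.0, 0.25, 0.5, 0.75, 1.0):
        net_interp = interpolate_params(net_A, net_B, alpha)
        print(alpha, conv1d(signal, net_interp["filter"]))
```

The printed rows move from the blurred `[1.0, 2.0, 1.0]` at `alpha = 0.0` to the untouched spike `[0.0, 4.0, 0.0]` at `alpha = 1.0`. Because a single convolution is linear in its weights, interpolating the filter here gives exactly the same result as interpolating the two outputs. In a deep network with nonlinearities, parameter interpolation and output interpolation are no longer equivalent.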