Docker Quickstart

This tutorial will guide you through the process of setting up and running YOLOv5 in a Docker container.

You can also explore other quickstart options for YOLOv5, such as our Colab Notebook.

Before you begin, make sure the following prerequisites are installed:

- Nvidia Driver: Version 455.23 or higher. Download from Nvidia's website.

- Nvidia-Docker: Allows Docker to interact with your local GPU. Installation instructions are available on the Nvidia-Docker GitHub repository.

- Docker Engine - CE: Version 19.03 or higher. Download and installation instructions can be found on the Docker website.
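
The minimum versions listed above can be checked from a terminal before pulling the image. The following is a minimal sketch, not part of YOLOv5: the helper names `version_ge` and `report` are illustrative, and the comparison assumes a `sort` that supports `-V` (version sort), as GNU coreutils does. It checks only the Docker client and the Nvidia driver; Nvidia-Docker is not covered.

```shell
# Sketch: report whether installed versions meet the minimums listed above.
# version_ge and report are illustrative helper names, not YOLOv5 tooling.

# Succeed if version $1 is greater than or equal to version $2.
# Assumes `sort -V` (version sort) is available, as in GNU coreutils.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Print a one-line status. Usage: report NAME FOUND_VERSION REQUIRED_VERSION
report() {
  if [ -z "$2" ]; then
    echo "$1: not found"
  elif version_ge "$2" "$3"; then
    echo "$1: $2 (meets minimum $3)"
  else
    echo "$1: $2 (below minimum $3)"
  fi
}

# Docker client version; left empty if docker is not installed.
docker_version=""
if command -v docker >/dev/null 2>&1; then
  docker_version="$(docker version --format '{{.Client.Version}}' 2>/dev/null || true)"
fi
report "Docker Engine" "$docker_version" "19.03"

# Driver version of the first GPU; left empty if nvidia-smi is not installed.
driver_version=""
if command -v nvidia-smi >/dev/null 2>&1; then
  driver_version="$(nvidia-smi --query-gpu=driver_version --format=csv,noheader 2>/dev/null | head -n 1 || true)"
fi
report "Nvidia driver" "$driver_version" "455.23"
```

A component reported as "not found" or "below minimum" should be installed or upgraded before continuing.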

The Ultralytics YOLOv5 DockerHub repository is available at https://hub.docker.com/r/ultralytics/yolov5. Docker Autobuild ensures that the ultralytics/yolov5:latest image is always in sync with the most recent repository commit. To pull the latest image, run the following command:

sudo docker pull ultralytics/yolov5:latest

Run an interactive instance of the YOLOv5 Docker image (called a "container") using the -it flag:

sudo docker run --ipc=host -it ultralytics/yolov5:latest

To run a container with access to local files (e.g., COCO training data in /datasets), use the -v flag:

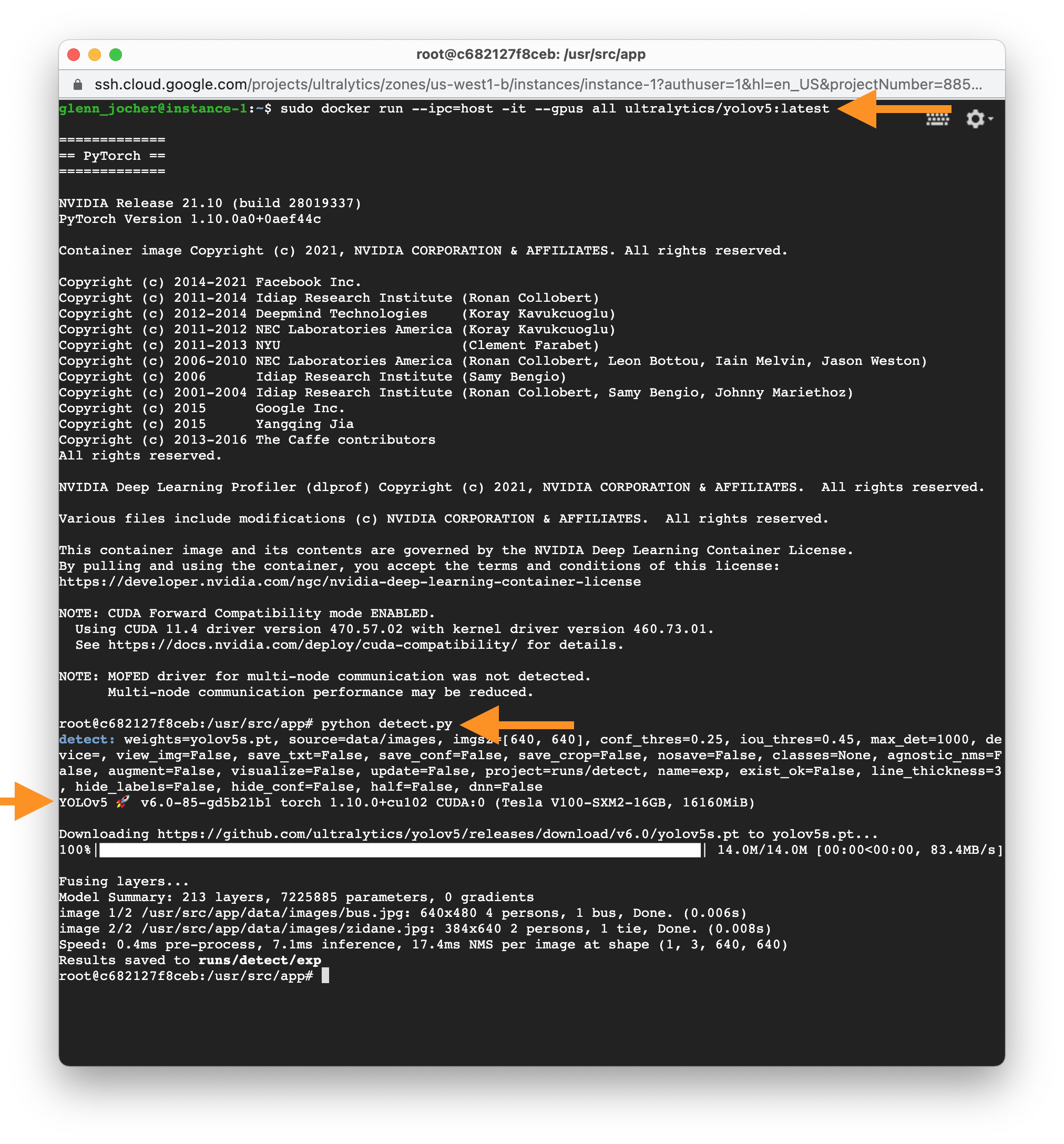

sudo docker run --ipc=host -it -v "$(pwd)"/datasets:/usr/src/datasets ultralytics/yolov5:latest

To run a container with GPU access, use the --gpus all flag:

sudo docker run --ipc=host -it --gpus all ultralytics/yolov5:latest

Now you can train, test, detect, and export YOLOv5 models within the running Docker container:

python train.py # train a model

python val.py --weights yolov5s.pt # validate a model for Precision, Recall, and mAP

python detect.py --weights yolov5s.pt --source path/to/images # run inference on images and videos

python export.py --weights yolov5s.pt --include onnx coreml tflite # export models to other formats
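
The flags introduced in this guide (`--ipc=host`, `-v`, and `--gpus all`) can also be combined to run one of the scripts above without opening an interactive shell. The sketch below is one possible wrapper under stated assumptions: the function name `run_in_yolov5` and the `DRY_RUN` variable are hypothetical, `--rm` (remove the container on exit) is an addition not used elsewhere in this guide, and it assumes the container starts in the directory that holds the YOLOv5 scripts. By default it only prints the command so it can be reviewed first.

```shell
# Sketch: run a YOLOv5 script in a one-off container with GPU and dataset access.
# run_in_yolov5 and DRY_RUN are hypothetical names, not part of YOLOv5 or Docker.

IMAGE="ultralytics/yolov5:latest"

run_in_yolov5() {
  runner=""
  if [ "${DRY_RUN:-1}" = "1" ]; then
    runner="echo"   # print the command instead of executing it
  fi
  # --rm removes the container when the command finishes (an addition here).
  # The -v mount mirrors the dataset mount shown earlier in this guide.
  $runner sudo docker run --rm --ipc=host --gpus all \
    -v "$(pwd)/datasets:/usr/src/datasets" \
    "$IMAGE" "$@"
}

# Dry run by default: prints the full docker command for validation.
run_in_yolov5 python val.py --weights yolov5s.pt
```

Setting `DRY_RUN=0` before calling the function executes the command instead of printing it. Passing `python -c "import torch; print(torch.cuda.is_available())"` as the arguments is one way to check that the GPU is visible inside the container.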

© 2024 Ultralytics Inc. All rights reserved.

https://ultralytics.com