
How can I convert .pt weight to tflite? #4586


|

@jaskiratsingh2000 see tf.py: Lines 1 to 12 in bbfafea

|

|

@glenn-jocher Hey, thanks for referring me to this issue, I appreciate it. Instead of the "yolov5s.pt" weights, can I also use a custom-trained weights file such as "best.pt" or "last.pt"? Another quick question: will the model be exported, and where can I find it afterwards? |

|

@jaskiratsingh2000 you can export any YOLOv5 model, that's the main purpose of the function. It wouldn't be much use if it only exported official models. Exported models are placed in the same parent directory as the source model. |
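As a rough illustration of where to look for the exported file, the sketch below derives a sibling path next to the source weights. The `-fp16.tflite` suffix is taken from a file name mentioned later in this thread (`best-fp16.tflite`) and may differ between YOLOv5 versions; `expected_export_path` is a hypothetical helper, not part of the repository.

```python
from pathlib import Path


def expected_export_path(weights: str, suffix: str = "-fp16.tflite") -> Path:
    """Return the path where an export would land if it sits next to the weights.

    Assumption: the exporter writes into the same parent directory as the
    source model and replaces the '.pt' extension with `suffix`.
    """
    src = Path(weights)
    return src.with_name(src.stem + suffix)


print(expected_export_path("runs/train/exp/weights/best.pt"))
```

If the file is not there after an export, the export log itself usually states the path it saved to.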

|

But I couldn't find a way to export


|

|

Following the usage indications: I got: However, when running the same script as: the conversion runs correctly. This may be a typo in the documentation or a code issue... Hope this feedback helps! Thanks for the amazing work! |

|

@JNaranjo-Alcazar thanks for the bug report! I'm not able to reproduce this; when I run the usage example in Colab everything works well:

# Setup

!git clone https://github.com/ultralytics/yolov5 # clone repo

%cd yolov5

%pip install -qr requirements.txt # install dependencies

import torch

from IPython.display import Image, clear_output # to display images

clear_output()

print(f"Setup complete. Using torch {torch.__version__} ({torch.cuda.get_device_properties(0).name if torch.cuda.is_available() else 'CPU'})")

# Reproduce

!python models/tf.py --weights yolov5s.pt --cfg yolov5s.yaml |

|

I had this bug using a Docker container (not the one available in the repository) with Python 3.7, after installing all the requirements and TensorFlow. I think I should use Colab then. Thanks for the quick reply. |

|

@glenn-jocher |

|

@Ronald-Kray TF exports are handled by export.py now: |

|


|

PYTHONPATH=. python3 models/tf.py --weights weights/yolov5s.pt --cfg models/yolov5s.yaml --img 320 --tfl-int8 --source /data/dataset/coco/coco2017/train2017 --ncalib 100

I am trying to convert my trained model to tflite. Please advise on the weights: should I use my own trained best.pt? And what should be passed as --source? |

|

I am also getting an "illegal instruction" error. |

|

|

Do we need to pass the weights of our custom-trained model (best.pt), and what should --source be? Should it be the training dataset of images?

I tried, but I am getting a "permission denied" error.

Please advise.

Regards,

Kashish Goyal

On Wed, 17 Nov 2021, 6:33 pm, Glenn Jocher wrote:

> @kashishgoyal31
>
> python export.py --weights yolov5s.pt --include tflite


|

|

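@kashishgoyal31 --source can be anything you want. See detect.py for Usage examples instead of asking (lines 5 to 12 at commit 562191f): https://github.com/ultralytics/yolov5/blob/562191f5756273aca54225903f5933f7683daade/detect.py#L5-L12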
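For the `--tfl-int8` command quoted earlier in this thread, `--source` and `--ncalib` appear to control which images are used for int8 calibration. The sketch below shows one way to preview which files such a setting would cover. `pick_calibration_images` and the extension list are assumptions for illustration, not the repository's own loader.

```python
from pathlib import Path

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}  # assumed set of image types


def pick_calibration_images(source_dir: str, ncalib: int = 100) -> list:
    """Return up to `ncalib` image paths from `source_dir`, sorted by name."""
    files = sorted(
        p for p in Path(source_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )
    return files[:ncalib]
```

Listing the directory this way also surfaces a "permission denied" problem early, since `iterdir()` raises `PermissionError` when the folder is not readable.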

|

|

I tried but I am getting error permission denied.


|

|

@kashishgoyal31 👋 hi, thanks for letting us know about this possible problem with YOLOv5 🚀. We've created a few short guidelines below to help users provide what we need in order to get started investigating a possible problem.

How to create a Minimal, Reproducible Example

When asking a question, people will be better able to provide help if you provide code that they can easily understand and use to reproduce the problem. This is referred to by community members as creating a minimum reproducible example. Your code that reproduces the problem should be:

For Ultralytics to provide assistance your code should also be:

If you believe your problem meets all the above criteria, please close this issue and raise a new one using the 🐛 Bug Report template with a minimum reproducible example to help us better understand and diagnose your problem. Thank you! 😃 |

|

I have converted the weights from best.pt to tflite using the command below. The output image ignores my class details and shows class 0 instead of my custom class name. |

|

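@kashishgoyal31 class names are not in tflite files. You can manually add them here (line 80 at commit 7a39803): https://github.com/ultralytics/yolov5/blob/7a39803476f8ae55fb25ed93a400a3bba998d5e7/detect.py#L80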

|

|

The weights work fine for me with best.pt, and when I use detect.py I get the required results. But after converting the weights, the class information is wrong and the model does not work properly in the Android app. Please tell me the correct way to handle this.


|

|

When I run detect.py the predictions are correct, but it does not use my class labels and instead shows the default values class 0, 1, 2.


|

|

python3 export.py --weights /content/best.pt --img 320 --include tflite |

|

TFLite models don't have class names attached. You can pass a --data yaml during detect if you'd like to use alternative annotations names: |

|

I want to convert .pt file to .tflite. |

|

@ramchandra-bioenable TFLite image sizes are fixed, you define them at export time: |
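Because the input size is fixed at export time, images of other sizes have to be resized before inference. A minimal sketch of the arithmetic, assuming an aspect-preserving resize with symmetric padding (letterboxing) and a square 320-pixel input like the `--img 320` used in this thread; `letterbox_params` is a hypothetical helper.

```python
def letterbox_params(width: int, height: int, target: int = 320):
    """Compute resize scale, resized size, and padding to fit a square input.

    Returns (scale, (new_w, new_h), (pad_x, pad_y)) where the padding is the
    amount added on each side (left/right and top/bottom).
    """
    scale = min(target / width, target / height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_x = (target - new_w) / 2
    pad_y = (target - new_h) / 2
    return scale, (new_w, new_h), (pad_x, pad_y)


print(letterbox_params(640, 480))
```

The same scale and padding are needed again after inference to map predicted boxes back to the original image coordinates.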

|

!python models/tf.py --weights '/content/drive/MyDrive/best.pt'

Classes are not reflected in best-fp16.tflite. Please let me know how to reflect the classes from the .pt file. I'm running on Colab. |

|

@c1p31068 tflite models don't have attached class names metadata, you can find the names manually in your data.yaml |
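Since the names live in the dataset YAML rather than in the .tflite file, the mapping from class index to label has to be applied by the calling code. A minimal sketch, assuming the `names` entry has already been parsed from data.yaml (for example with a YAML library) and is either a list or an index-to-name mapping; the example labels are made up.

```python
def class_name(names, index: int) -> str:
    """Look up a label for a predicted class index.

    `names` may be a list (position = class index) or a dict keyed by index.
    Falls back to 'class<N>' when the index is unknown.
    """
    if isinstance(names, dict):
        return str(names.get(index, f"class{index}"))
    if 0 <= index < len(names):
        return str(names[index])
    return f"class{index}"


# Hypothetical labels, standing in for the `names` field of a data.yaml
names = ["helmet", "vest"]
print(class_name(names, 1))
```

In an Android app the same idea applies: ship the label list alongside the model and index into it with the predicted class id.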

|

Thanks for the reply. What is the best way to set the names manually in data.yaml? Can this be done in Google Colab? Also, I am a beginner; is this task feasible for me? My wording may be off because I'm using a translation tool. |

|

Hi, can .tflite be used with yolov5? |

|

@c1p31068 to export from PyTorch to TFLite |
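The export invocation quoted elsewhere in this thread can also be assembled from Python, for instance in a Colab cell or a script. This is a sketch only: `build_export_command` is a hypothetical helper, and the flags are limited to those that appear in the thread (`--weights`, `--img`, `--include`).

```python
import sys


def build_export_command(weights: str, img_size: int = 320, include=("tflite",)):
    """Build the argument list for running export.py from the yolov5 repo root."""
    return [
        sys.executable, "export.py",
        "--weights", weights,
        "--img", str(img_size),
        "--include", *include,
    ]


cmd = build_export_command("best.pt")
print(" ".join(cmd))
# To actually run it (from inside the cloned repo):
# import subprocess; subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell-quoting problems with paths that contain spaces, such as Google Drive folders.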

|

Thanks for the reply. I was able to export! However, my YOLOv5 labels are not reflected: when I run and test the model with YOLOv5, the label shown is person. |

|

@c1p31068 if you're using YOLOv5 for inference you can pass your --data to specify your names, i.e. |

|

Thank you! We've solved the problem! I am so glad to have found you. Thank you so much! |

|

Hi, is it possible to specify a version of tflite to convert? |

|

@c1p31068 what do you mean a version of tflite? Whatever version of tensorflow that is installed is used during export. |
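To see which TensorFlow version an export would pick up, the installed package metadata can be queried. A small sketch using only the standard library; it assumes the distribution is named `tensorflow` (variants such as `tensorflow-cpu` would need their own name).

```python
from importlib import metadata


def installed_version(package: str = "tensorflow"):
    """Return the installed version string of `package`, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


print("tensorflow:", installed_version() or "not installed")
```

By extension, installing a different TensorFlow version in the environment before running the export is the way to change which version is used.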

|

Thanks for the reply. |

|

@jaskiratsingh2000 Hello. Did you try deploying the converted/exported TFLite model in Android Studio for object detection in a mobile app? If so, did you have any issues with the converted model? |

|

@HripsimeS 👋 Hello! Thanks for asking about Export Formats. YOLOv5 🚀 offers export to almost all of the common export formats. See our TFLite, ONNX, CoreML, TensorRT Export Tutorial for full details.

Formats

YOLOv5 inference is officially supported in 11 formats:

💡 ProTip: Export to ONNX or OpenVINO for up to 3x CPU speedup. See CPU Benchmarks.

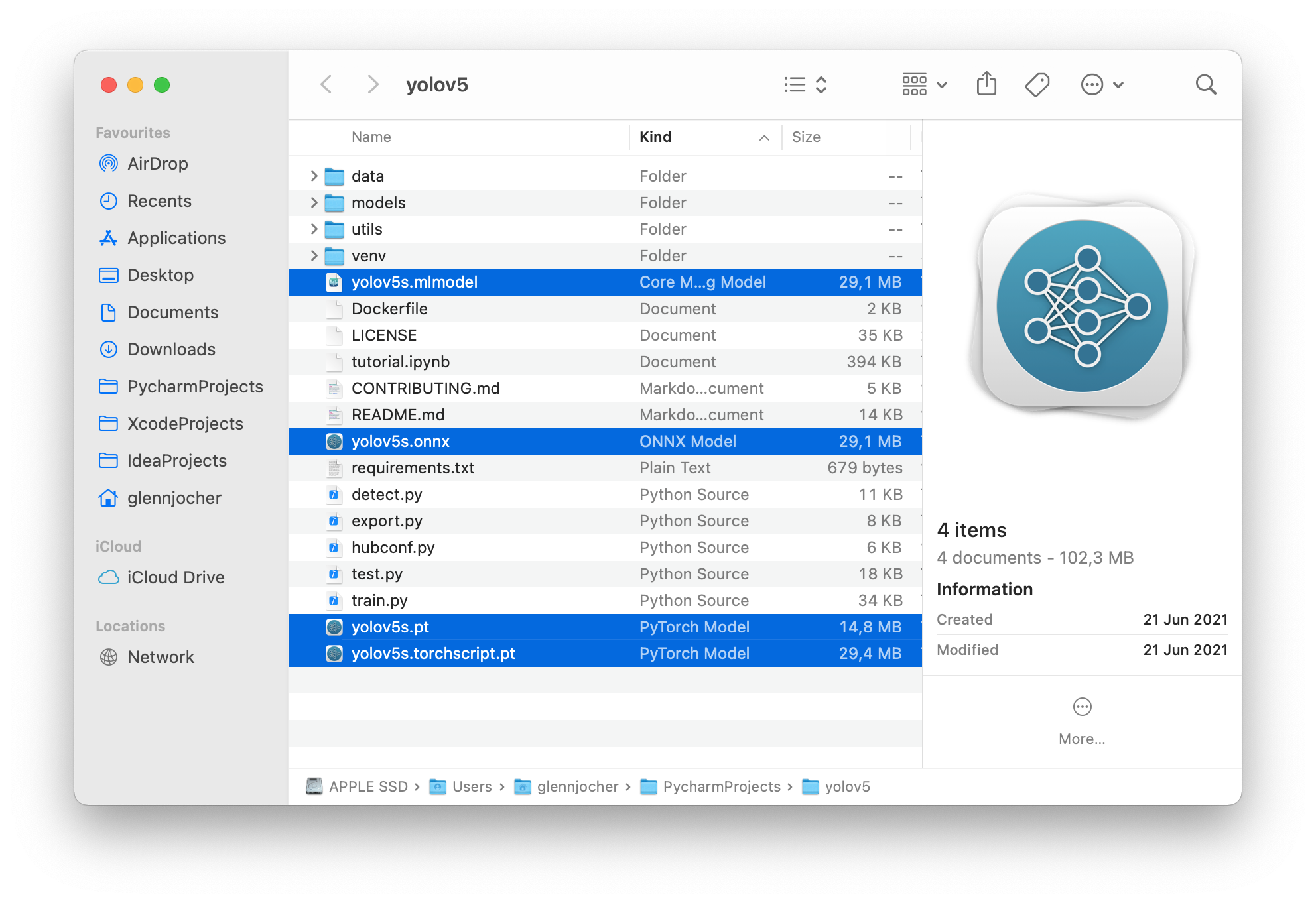

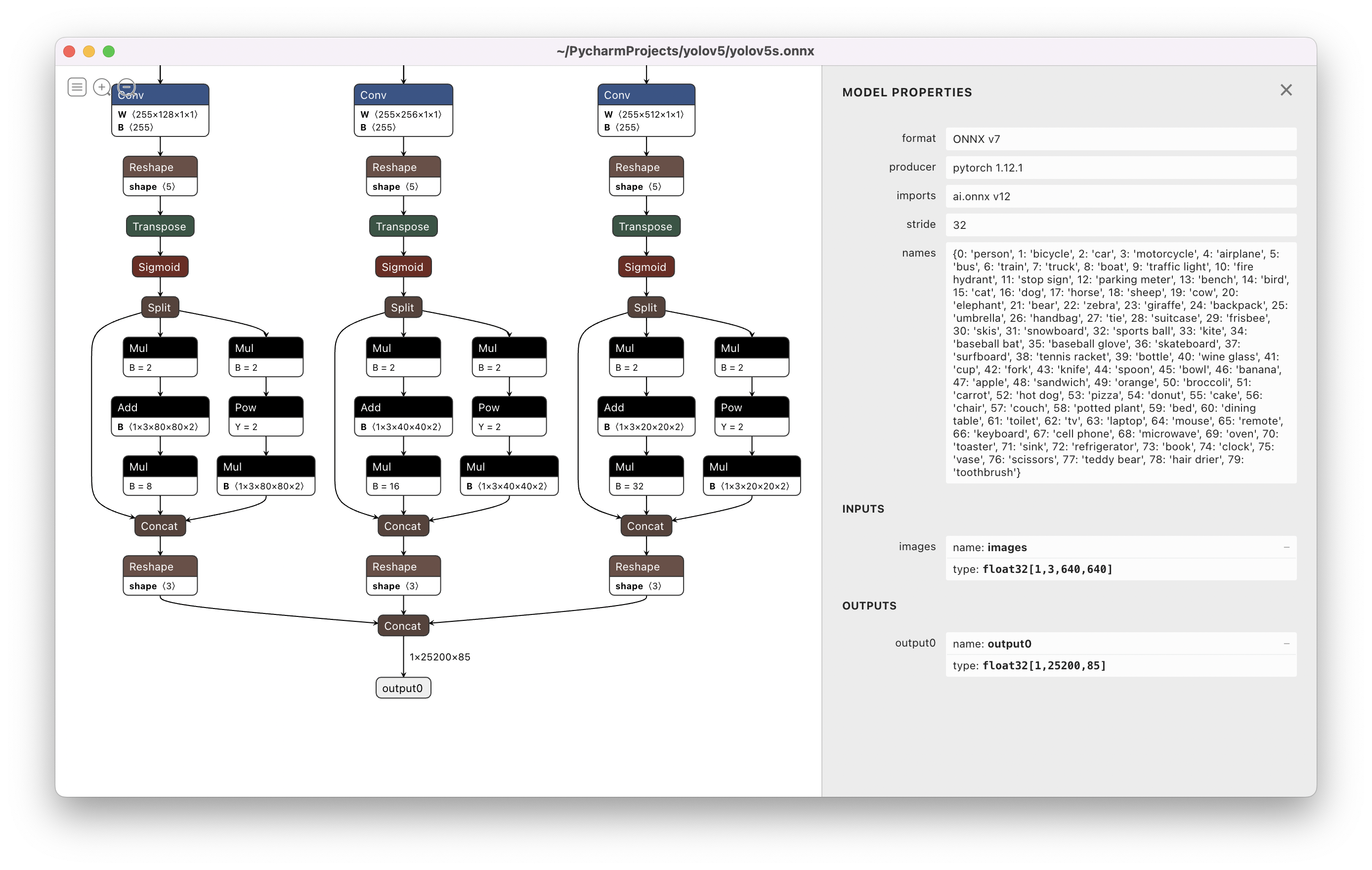

Benchmarks

Benchmarks below run on a Colab Pro with the YOLOv5 tutorial notebook.

python benchmarks.py --weights yolov5s.pt --imgsz 640 --device 0

Colab Pro V100 GPU

Colab Pro CPU

Export a Trained YOLOv5 Model

This command exports a pretrained YOLOv5s model to TorchScript and ONNX formats.

python export.py --weights yolov5s.pt --include torchscript onnx

💡 ProTip: Add

Output:

export: data=data/coco128.yaml, weights=['yolov5s.pt'], imgsz=[640, 640], batch_size=1, device=cpu, half=False, inplace=False, train=False, keras=False, optimize=False, int8=False, dynamic=False, simplify=False, opset=12, verbose=False, workspace=4, nms=False, agnostic_nms=False, topk_per_class=100, topk_all=100, iou_thres=0.45, conf_thres=0.25, include=['torchscript', 'onnx']

YOLOv5 🚀 v6.2-104-ge3e5122 Python-3.7.13 torch-1.12.1+cu113 CPU

Downloading https://github.com/ultralytics/yolov5/releases/download/v6.2/yolov5s.pt to yolov5s.pt...

100% 14.1M/14.1M [00:00<00:00, 274MB/s]

Fusing layers...

YOLOv5s summary: 213 layers, 7225885 parameters, 0 gradients

PyTorch: starting from yolov5s.pt with output shape (1, 25200, 85) (14.1 MB)

TorchScript: starting export with torch 1.12.1+cu113...

TorchScript: export success ✅ 1.7s, saved as yolov5s.torchscript (28.1 MB)

ONNX: starting export with onnx 1.12.0...

ONNX: export success ✅ 2.3s, saved as yolov5s.onnx (28.0 MB)

Export complete (5.5s)

Results saved to /content/yolov5

Detect: python detect.py --weights yolov5s.onnx

Validate: python val.py --weights yolov5s.onnx

PyTorch Hub: model = torch.hub.load('ultralytics/yolov5', 'custom', 'yolov5s.onnx')

Visualize: https://netron.app/

The 3 exported models will be saved alongside the original PyTorch model:

Netron Viewer is recommended for visualizing exported models:

Exported Model Usage Examples

python detect.py --weights yolov5s.pt                 # PyTorch
                           yolov5s.torchscript        # TorchScript
                           yolov5s.onnx               # ONNX Runtime or OpenCV DNN with --dnn
                           yolov5s_openvino_model     # OpenVINO
                           yolov5s.engine             # TensorRT
                           yolov5s.mlmodel            # CoreML (macOS only)
                           yolov5s_saved_model        # TensorFlow SavedModel
                           yolov5s.pb                 # TensorFlow GraphDef
                           yolov5s.tflite             # TensorFlow Lite
                           yolov5s_edgetpu.tflite     # TensorFlow Edge TPU
                           yolov5s_paddle_model       # PaddlePaddle

python val.py --weights yolov5s.pt                 # PyTorch
                        yolov5s.torchscript        # TorchScript
                        yolov5s.onnx               # ONNX Runtime or OpenCV DNN with --dnn
                        yolov5s_openvino_model     # OpenVINO
                        yolov5s.engine             # TensorRT
                        yolov5s.mlmodel            # CoreML (macOS only)
                        yolov5s_saved_model        # TensorFlow SavedModel
                        yolov5s.pb                 # TensorFlow GraphDef
                        yolov5s.tflite             # TensorFlow Lite
                        yolov5s_edgetpu.tflite     # TensorFlow Edge TPU
                        yolov5s_paddle_model       # PaddlePaddle

Use PyTorch Hub with exported YOLOv5 models:

import torch

# Model

model = torch.hub.load('ultralytics/yolov5', 'custom', 'yolov5s.pt')  # PyTorch

# The third argument can instead be any of the exported models:
#   'yolov5s.torchscript'     # TorchScript
#   'yolov5s.onnx'            # ONNX Runtime
#   'yolov5s_openvino_model'  # OpenVINO
#   'yolov5s.engine'          # TensorRT
#   'yolov5s.mlmodel'         # CoreML (macOS only)
#   'yolov5s_saved_model'     # TensorFlow SavedModel
#   'yolov5s.pb'              # TensorFlow GraphDef
#   'yolov5s.tflite'          # TensorFlow Lite
#   'yolov5s_edgetpu.tflite'  # TensorFlow Edge TPU
#   'yolov5s_paddle_model'    # PaddlePaddle

# Images

img = 'https://ultralytics.com/images/zidane.jpg' # or file, Path, PIL, OpenCV, numpy, list

# Inference

results = model(img)

# Results

results.print() # or .show(), .save(), .crop(), .pandas(), etc.

OpenCV DNN inference

OpenCV inference with ONNX models:

python export.py --weights yolov5s.pt --include onnx

python detect.py --weights yolov5s.onnx --dnn # detect

python val.py --weights yolov5s.onnx --dnn # validate

C++ Inference

YOLOv5 OpenCV DNN C++ inference on exported ONNX model examples:

YOLOv5 OpenVINO C++ inference examples:

Good luck 🍀 and let us know if you have any other questions! |
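The output shape (1, 25200, 85) reported in the export log above can be reproduced by hand. The sketch assumes the standard YOLOv5 detection head with strides 8, 16, and 32, three anchors per grid cell, and the 80 COCO classes; a custom model with a different class count or image size gives different numbers.

```python
def yolov5_output_shape(img_size: int = 640, num_classes: int = 80,
                        strides=(8, 16, 32), anchors_per_cell: int = 3,
                        batch: int = 1):
    """Return (batch, predictions, values_per_prediction) for a square input."""
    cells = sum((img_size // s) ** 2 for s in strides)
    predictions = anchors_per_cell * cells
    values = 4 + 1 + num_classes  # box (x, y, w, h) + objectness + class scores
    return batch, predictions, values


print(yolov5_output_shape())        # (1, 25200, 85)
print(yolov5_output_shape(320, 2))  # a 2-class model exported at 320 pixels
```

Knowing the expected shape is useful when parsing raw TFLite outputs in an app, since the last dimension tells you how many class scores follow the box and objectness values.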

|

@glenn-jocher I am trying to convert best.pt to tflite but keep encountering the error. |

|

Hi, I want to convert the weights file 'best.pt' to TFLite. I modified the architecture of the YOLOv5 model (I replaced some C3 modules with a transformer), but I got an error in export.py that c3tr is not defined. TensorFlow Lite: starting export with tensorflow 2.12.0... ext |

|

Hi, I want to convert the weights file 'best.pt' to TFLite. I modified the architecture of the YOLOv5 model (I replaced the final C3 module with a transformer), but I got an error in export.py that c3tr is not defined. Please help me if there is a solution, or tell me if it is impossible to convert to TFLite when the architecture is modified. TensorFlow Lite: starting export with tensorflow 2.12.0... ext |

|

@YasmineeBa hello, it seems like the error you're encountering is related to the modified architecture that you're using. The error message states that 'C3STR' is not defined, which suggests that the modified architecture is expecting a layer or function that is not defined. Regarding your question about whether it is possible to convert a modified YOLOv5 architecture to TFLite, the answer is that it should be possible as long as the modified architecture can be defined using the available TensorFlow operations. However, it might require some additional customization and configuration to ensure that the conversion process is successful. One suggestion is to try and define the 'C3STR' layer or function in your modified architecture and see if that resolves the issue. Additionally, you could try modifying the TFLite export script to account for the changes in your architecture. If the issue persists, it might be helpful to share more details about the modified architecture and the changes that were made in order to provide more specific guidance. I hope this helps! |

|

Thank you for the response. I have defined all the functions related to C3STR in common.py and declared them in yolo.py using PyTorch, and I modified yolov5s.yaml with the new architecture. But when I run the script to export the weights to TFLite, the code shows the default YOLOv5s architecture (in the architecture summary). |

|

@YasmineeBa hello! It's great to hear that you've defined all the necessary functions related to 'C3STR' in the common.py file and declared them in yolo.py using PyTorch. If you're modifying the yolov5s.yaml file to incorporate these changes, you should see the modified architecture when running the training script with PyTorch. However, if you're encountering issues when exporting the model to TFLite, it's possible that some changes may need to be made in the tf.py file to ensure that the TensorFlow implementation is consistent with the changes made in the PyTorch implementation. It may be helpful to review the documentation and examples related to exporting PyTorch models to TensorFlow and TFLite to ensure that the conversion process is executed correctly. Please feel free to provide more details about the specific issues you're encountering with the TFLite export process, and we can work together to find a solution. |
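One way to catch an "is not defined" error before starting the export is to check that every module named in the model YAML has a TensorFlow counterpart. The sketch assumes a `TF` + module-name naming convention for the counterparts and takes the set of available names as plain input. Both the convention and the example names are assumptions for illustration, so check them against your copy of tf.py.

```python
def missing_tf_modules(yaml_modules, available_tf_names, prefix: str = "TF"):
    """Return module names from the model YAML that lack a TF counterpart."""
    missing = []
    for name in yaml_modules:
        tf_name = prefix + name.replace("nn.", "")
        if tf_name not in available_tf_names and name not in missing:
            missing.append(name)
    return missing


# Hypothetical example: a backbone using a custom transformer block
modules_in_yaml = ["Conv", "C3", "C3TR", "SPPF", "nn.Upsample", "Concat", "Detect"]
defined_in_tf_py = {"TFConv", "TFC3", "TFSPPF", "TFUpsample", "TFConcat", "TFDetect"}
print(missing_tf_modules(modules_in_yaml, defined_in_tf_py))
```

Any name this reports would need a TensorFlow implementation added before the custom architecture can be converted.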

|

Hi,

Thank you for your response.

I solved the undefined-function problem by defining all the functions that C3STR depends on in TensorFlow in the tf.py file, but I got a new error. Can you please help me with this error?


|

|

Hi @YasmineeBa, It's good to hear that you were able to define all the necessary functions related to 'C3STR' in the common.py file and resolved the issue related to undefined functions. However, it seems like you're encountering a new error during the TFLite export process. In order to provide more specific guidance, it would be helpful to review the error message that you're encountering. Based on the error message, we can suggest possible solutions to resolve the issue. Please feel free to share more details about the error message that you're encountering, and we can work together to find a solution. In the meantime, it may be helpful to review the documentation and examples related to exporting PyTorch models to TensorFlow and TFLite to ensure that the conversion process is executed correctly. Let us know if you have further questions or concerns. Best regards. |

|

Thank you for your response.

I was able to solve this problem. It was a misdeclaration of the call function in the function I defined, so the C3STR part was never compiled when the program ran. Once I noticed this, I reviewed all my function declarations and was finally able to solve the problem.

Thank you


|

|

Hi @YasmineeBa, Thanks for updating us and letting us know that the issue has been resolved! It's great to hear that you were able to identify and fix the problem by reviewing your function declarations. If you encounter any further issues or have any additional questions, please don't hesitate to reach out for assistance. We're always here to help. Best regards. |

|

Hey, I am converting a YOLOv7 .pb model to TFLite, but when I add metadata using the TensorFlow object detection metadata writer, output_tensor_metadata shows only location instead of category, score, and location.

!python export.py --weights /content/drive/MyDrive/ObjectDetection/yolov7/runs/train/yolov7-custom/weights/best.pt --grid --end2end --simplify

!onnx-tf convert -i /content/drive/MyDrive/ObjectDetection/yolov7/runs/train/yolov7-custom/weights/best.onnx -o /content/drive/MyDrive/ObjectDetection/yolov7/tfmodel

import tensorflow as tf

with open('/content/drive/MyDrive/ObjectDetection/yolov7/yolov7_model.tflite', 'wb') as f:

# adding metadata

_LABEL_FILE = "/content/drive/MyDrive/ObjectDetection/yolov7/labels.txt"

_INPUT_NORM_MEAN = 127.5

# Create the metadata writer.

writer = ObjectDetectorWriter.create_for_inference(

# Verify the metadata generated by metadata writer.

print(writer.get_metadata_json())

# Populate the metadata into the model.

writer_utils.save_file(writer.populate(), _SAVE_TO_PATH)

output:-

/usr/local/lib/python3.10/dist-packages/tensorflow_lite_support/metadata/python/metadata.py:395: UserWarning: File, 'labels.txt', does not exist in the metadata. But packing it to tflite model is still allowed. |

|

@VishalShinde16 hello, It seems that you are encountering an issue with the TensorFlow Object Detection Metadata Writer when adding metadata to your converted .tflite model. Specifically, you mentioned that in the "output_tensor_metadata" section, only the "location" is shown instead of "category," "score," and "location". To address this issue, one potential solution is to check your label file, "labels.txt", to ensure that it exists and is correctly formatted. The warning message you received indicates that the file might not exist, but it is still allowed to pack it into the .tflite model. Please verify that the "labels.txt" file is present and correctly specifies the categories for your objects. Ensure that each category is listed on a separate line in the file. Once you have confirmed the presence and correctness of the label file, rerun the process of adding metadata to your model using the TensorFlow Object Detection Metadata Writer. This should ensure that the metadata properly includes the "category" and "score" information along with the "location." I hope this helps! Let me know if you have any further questions or concerns. |
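The label-file check suggested above can be scripted before running the metadata writer. A minimal sketch, assuming a UTF-8 text file with one category per line; `read_labels` is a hypothetical helper and not part of any TensorFlow package.

```python
from pathlib import Path


def read_labels(path: str) -> list:
    """Read a label file with one category per line and validate it."""
    label_file = Path(path)
    if not label_file.is_file():
        raise FileNotFoundError(f"label file not found: {path}")
    lines = label_file.read_text(encoding="utf-8").splitlines()
    labels = [line.strip() for line in lines if line.strip()]  # skip blank lines
    if not labels:
        raise ValueError("label file is empty")
    duplicates = {label for label in labels if labels.count(label) > 1}
    if duplicates:
        raise ValueError(f"duplicate labels: {sorted(duplicates)}")
    return labels
```

Comparing `len(read_labels(...))` with the number of classes the model was trained on is a quick way to spot a mismatched label file.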

|

@glenn-jocher hello sir, I would like to ask how to transform the .pt file from my custom training in google colab to tflite? Should I also run it in Google colab to convert, or is it okay to run it in my windows local computer? Thank you. |

|

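@rochellemadulara hello! 😊 You can convert the .pt file either in Google Colab or on your local Windows computer. For conversion, ensure you have TensorFlow installed. Here's a brief example of how you might do it in Python:

import torch

# Load your trained checkpoint (run inside the yolov5 repo so the model classes can be unpickled;
# recent PyTorch versions may need weights_only=False)
ckpt = torch.load('your_model.pt', map_location='cpu')

# Assumption: YOLOv5 checkpoints are dicts holding the model under 'model'; a plain module is used as-is
model = (ckpt['model'] if isinstance(ckpt, dict) else ckpt).float().eval()

# Dummy input matching the export image size (assumed 640x640 here)
dummy_input = torch.zeros(1, 3, 640, 640)

# Convert to ONNX format first (opset 12 matches the export log earlier in this thread)
torch.onnx.export(model, dummy_input, "model.onnx", opset_version=12)

# Then convert ONNX to TensorFlow and finally to TFLite

# Note: You might need additional libraries and steps, refer to the TensorFlow documentation for detailed steps.

Remember to adjust paths and parameters as necessary. If you're doing this on your local computer, make sure you have the required environment set up, similar to what you used in Colab. Hope this helps! If you have further questions, feel free to ask. |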

|

Hello again, sir. When I run my code, these are the errors:

2024-04-05 22:49:02.875687: I tensorflow/core/util/port.cc:113] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable

WARNING:tensorflow:From C:\Users\user\anaconda3New\envs\yolov5\Lib\site-packages\tensorflow_probability\python\internal\backend\numpy_utils.py:48: The name tf.logging.TaskLevelStatusMessage is deprecated. Please use tf.compat.v1.logging.TaskLevelStatusMessage instead.

WARNING:tensorflow:From C:\Users\user\anaconda3New\envs\yolov5\Lib\site-packages\tensorflow_probability\python\internal\backend\numpy_utils.py:48: The name tf.control_flow_v2_enabled is deprecated. Please use tf.compat.v1.control_flow_v2_enabled instead.

C:\Users\user\anaconda3New\envs\yolov5\Lib\site-packages\tensorflow_addons\utils\tfa_eol_msg.py:23: UserWarning: TensorFlow Addons (TFA) has ended development and introduction of new features. For more information see: tensorflow/addons#2807

warnings.warn(

This is my pip list for keras and tensorflow: |

|

Hello @rochellemadulara, It looks like you're experiencing compatibility issues between different TensorFlow and add-on versions. Firstly, TensorFlow Addons is not compatible with TensorFlow 2.16.1 as per the warning you received. You have a couple of options to solve this issue:

Ensure your environment is activated when running these commands to apply changes to the correct Python environment. After correcting the version mismatch, your code should run without the stated error. Unfortunately, if TFA does not support TensorFlow 2.16.1 yet, you might need to stick with option 1. Hope this helps resolve the issue! If you encounter further errors, feel free to ask. |

Hi @glenn-jocher I want to convert PyTorch weights to tflite how can I do that?
