GPU RAM requirements for training #5777


How much GPU RAM is needed to train the YOLOv5 s, m, l, and x models with default parameters? I want to understand whether I'll be able to train a high-quality model on my laptop (RTX 3050, 4GB) or desktop (RTX 3060, 12GB), or whether that's far too little and I need to look for something more exotic. I have the same RAM question for inference. Thanks!


Replies: 1 comment 7 replies


@DSA101 👋 Hello! Thanks for asking about CUDA memory issues. YOLOv5 🚀 can be trained on CPU, single-GPU, or multi-GPU. When training on GPU it is important to keep your batch-size small enough that you do not use all of your GPU memory, otherwise you will see a CUDA Out Of Memory (OOM) Error and your training will crash. You can observe your CUDA memory utilization using either the CUDA Out of Memory SolutionsIf you encounter a CUDA OOM error, the steps you can take to reduce your memory usage are:

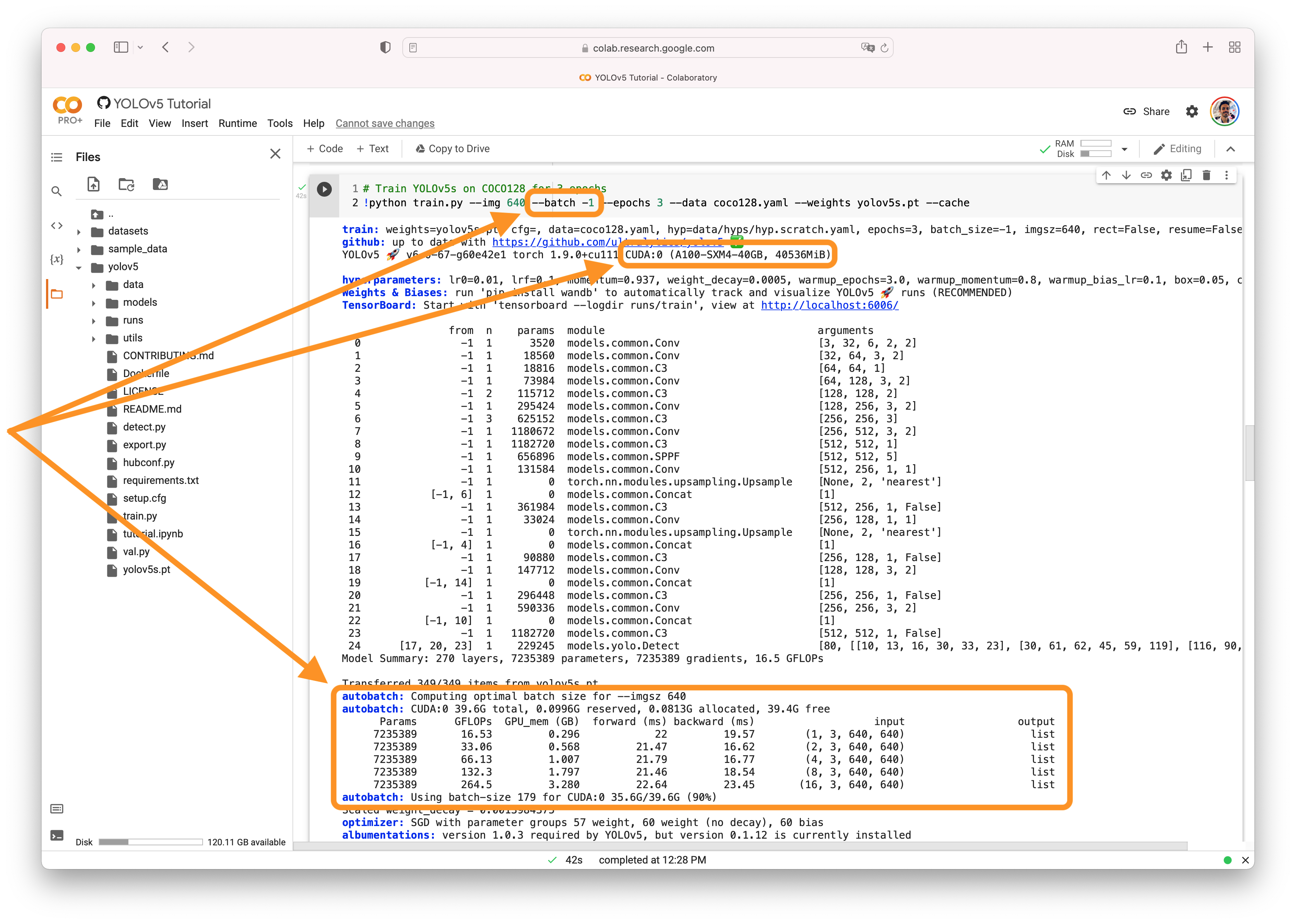

AutoBatchYou can use YOLOv5 AutoBatch (NEW) to find the best batch size for your training by passing Good luck and let us know if you have any other questions! |
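For anyone who wants the nvidia-smi memory numbers inside a script rather than in a terminal, here is a minimal Python sketch. It assumes the NVIDIA driver's nvidia-smi tool is on your PATH; the helper names `parse_gpu_memory` and `query_gpu_memory` are made up for illustration and are not part of YOLOv5.

```python
import shutil
import subprocess

# nvidia-smi query: used and total memory in MiB, one CSV row per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text):
    """Parse rows like '1024, 4096' into (used_mib, total_mib) tuples."""
    rows = []
    for line in csv_text.strip().splitlines():
        used, total = (int(field) for field in line.split(","))
        rows.append((used, total))
    return rows


def query_gpu_memory():
    """Return per-GPU (used_mib, total_mib), or [] if nvidia-smi cannot be run."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    except (subprocess.CalledProcessError, OSError):
        return []
    return parse_gpu_memory(result.stdout)


if __name__ == "__main__":
    for index, (used, total) in enumerate(query_gpu_memory()):
        print(f"GPU {index}: {used} / {total} MiB used")
```

Running this in a second terminal while training is under way gives a rough picture of how close the current batch size is to the card's limit.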


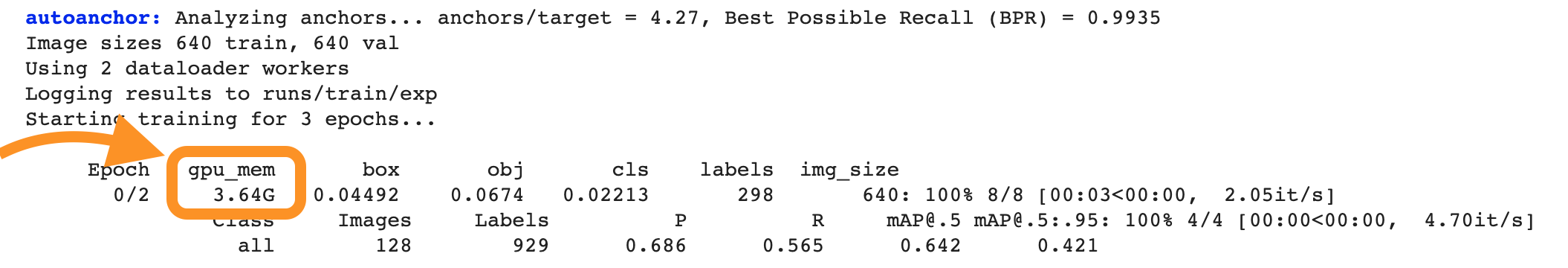

@DSA101 👋 Hello! Thanks for asking about CUDA memory issues. YOLOv5 🚀 can be trained on CPU, single-GPU, or multi-GPU. When training on GPU it is important to keep your batch-size small enough that you do not use all of your GPU memory, otherwise you will see a CUDA Out Of Memory (OOM) Error and your training will crash. You can observe your CUDA memory utilization using either the

nvidia-smicommand or by viewing your console output:CUDA Out of Memory Solutions

If you encounter a CUDA OOM error, the steps you can take to reduce your memory usage are:

--batch-size--img-size
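Reducing --batch-size after an OOM error can be automated with a simple back-off loop. The sketch below is framework-agnostic and purely illustrative: `run_one_step` is a hypothetical callable standing in for one training step, and `MemoryError` stands in for the framework's OOM exception (in PyTorch you would likely pass its CUDA out-of-memory exception type instead). It is not a description of how YOLOv5 AutoBatch works internally.

```python
def find_fitting_batch_size(run_one_step, start=64, minimum=1,
                            oom_errors=(MemoryError,)):
    """Halve the batch size until run_one_step(batch_size) stops raising OOM."""
    batch_size = start
    while batch_size >= minimum:
        try:
            run_one_step(batch_size)
            return batch_size
        except oom_errors:
            batch_size //= 2  # back off and retry with half the batch
    raise RuntimeError("even the minimum batch size did not fit in memory")


# Hypothetical stand-in for a training step: anything above `limit` "runs out of memory".
def fake_step(batch_size, limit=16):
    if batch_size > limit:
        raise MemoryError(f"batch size {batch_size} does not fit")


print(find_fitting_batch_size(fake_step))  # tries 64, 32, then 16; prints 16
```

Halving keeps the number of failed attempts small, at the cost of possibly settling below the largest batch size that would actually fit.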