This code provides a Python implementation, using TensorFlow, of the following research paper:

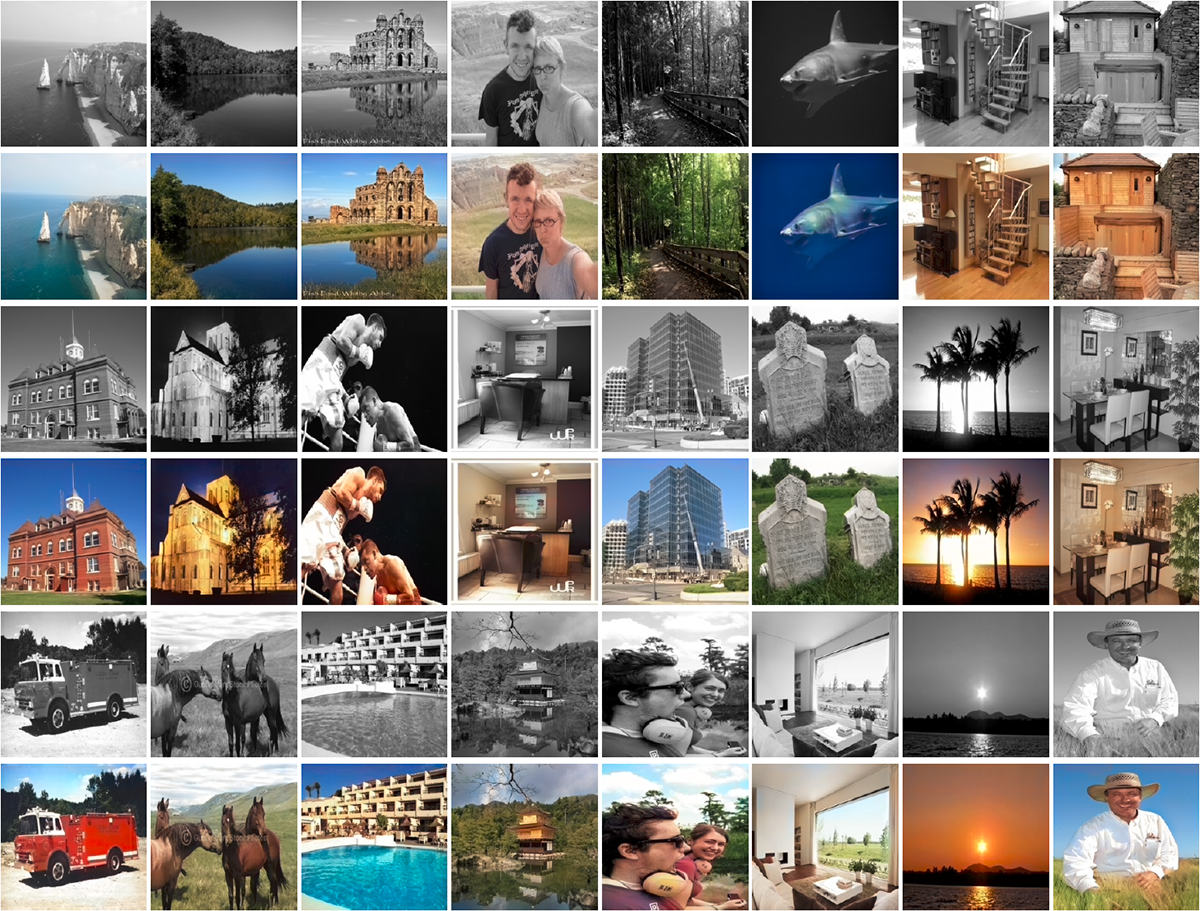

"Let there be Color!: Joint End-to-end Learning of Global and Local Image Priors for Automatic Image Colorization with Simultaneous Classification"

Satoshi Iizuka, Edgar Simo-Serra, and Hiroshi Ishikawa

ACM Transactions on Graphics (Proc. of SIGGRAPH 2016), 2016

This paper presents a method for automatically colorizing grayscale images with a deep network. The network jointly learns local and global image features in a single framework, and the trained model can then be applied to images of any resolution. Incorporating global features allows the model to produce realistic colorizations.

See the project page for more detailed information.

The deep network can be divided into the following components:

Low-level features network: a 6-layer convolutional neural network that extracts low-level features from the input image. These features are fed to both the mid-level features network and the global features network.
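To see how the six layers change the feature-map size, the sketch below traces (height, width, channels) through them. It is an illustration, not code from model.py: the per-layer filter counts and strides are our reading of the paper's architecture table, every layer is assumed to be a 3×3 convolution with 1 pixel of zero padding, and the helper names are hypothetical. Three stride-2 layers reduce the resolution to roughly H/8 × W/8.

```python
def conv3x3_out(size, stride):
    """Output size of a 3x3 convolution with 1 pixel of zero padding."""
    return (size + 2 * 1 - 3) // stride + 1

# (filters, stride) for each of the six layers; assumed from the paper.
LOW_LEVEL = [(64, 2), (128, 1), (128, 2), (256, 1), (256, 2), (512, 1)]

def low_level_shape(h, w):
    """Trace (height, width, channels) through the low-level features network."""
    c = 1  # grayscale input has a single channel
    for filters, stride in LOW_LEVEL:
        h, w, c = conv3x3_out(h, stride), conv3x3_out(w, stride), filters
    return h, w, c

print(low_level_shape(224, 224))  # (28, 28, 512)
print(low_level_shape(256, 320))  # (32, 40, 512)
```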

Global features network: global image features are obtained by further processing the low-level features with four convolutional layers followed by three fully connected layers.
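Fully connected layers need a fixed-length input, so, as we understand the paper, this branch works on a copy of the image rescaled to 224×224 (giving a 28×28×512 low-level output). The hypothetical trace below, using assumed filter counts and strides, shows how that fixed size ends up as a 256-dimensional global feature vector.

```python
def conv3x3_out(size, stride):
    """Output size of a 3x3 convolution with 1 pixel of zero padding."""
    return (size + 2 * 1 - 3) // stride + 1

GLOBAL_CONVS = [(512, 2), (512, 1), (512, 2), (512, 1)]  # (filters, stride), assumed
GLOBAL_FCS = [1024, 512, 256]                              # fully connected widths, assumed

def global_features_trace(h=28, w=28, c=512):
    """Shapes after each layer, starting from the low-level output of a 224x224 image."""
    shapes = []
    for filters, stride in GLOBAL_CONVS:
        h, w, c = conv3x3_out(h, stride), conv3x3_out(w, stride), filters
        shapes.append((h, w, c))
    shapes.append((h * w * c,))  # flattened input to the first fully connected layer
    for units in GLOBAL_FCS:
        shapes.append((units,))
    return shapes

for shape in global_features_trace():
    print(shape)
```

A differently sized input would change the flattened length feeding the first fully connected layer, which is why this branch needs the fixed-size copy while the local path does not.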

Mid-level features network: the low-level features are processed by two convolutional layers to obtain the mid-level features.
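If the two mid-level layers use stride 1 (an assumption based on the paper), they leave the spatial size untouched, which is why the local path can follow the input image at any resolution. A minimal trace with hypothetical helper names and assumed layer sizes:

```python
def conv3x3_out(size, stride):
    """Output size of a 3x3 convolution with 1 pixel of zero padding."""
    return (size + 2 * 1 - 3) // stride + 1

MID_LEVEL = [(512, 1), (256, 1)]  # (filters, stride) for the two layers, assumed

def mid_level_shape(h, w, c=512):
    """Trace the low-level output (H/8 x W/8 x 512) through the mid-level layers."""
    for filters, stride in MID_LEVEL:
        h, w, c = conv3x3_out(h, stride), conv3x3_out(w, stride), filters
    return h, w, c

print(mid_level_shape(32, 40))  # (32, 40, 256), from a 256x320 image
print(mid_level_shape(64, 48))  # (64, 48, 256), from a 512x384 image
```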

Fusion layer: the global features are concatenated with the local (mid-level) features at each spatial location and processed by a small one-layer network.
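The fusion step can be sketched in NumPy. This is an illustration under assumptions, not the repository's code: the 256-channel sizes and the ReLU activation follow our reading of the paper, and `fuse` is a hypothetical name. Because the same weights are applied at every pixel, the one-layer network behaves like a 1×1 convolution over the concatenated features.

```python
import numpy as np

def fuse(mid, glob, weight, bias):
    """Fusion layer sketch.

    mid:    (H, W, 256) mid-level (local) features
    glob:   (256,) global feature vector
    weight: (512, 256) and bias: (256,) parameters of the one-layer network
    """
    h, w, _ = mid.shape
    # Copy the same global vector to every spatial location.
    tiled = np.broadcast_to(glob, (h, w, glob.shape[0]))
    stacked = np.concatenate([tiled, mid], axis=-1)  # (H, W, 512)
    # One layer applied per pixel with shared weights, followed by ReLU.
    return np.maximum(stacked @ weight + bias, 0.0)  # (H, W, 256)

rng = np.random.default_rng(0)
mid = rng.standard_normal((32, 40, 256))
glob = rng.standard_normal(256)
weight = rng.standard_normal((512, 256)) * 0.01
bias = np.zeros(256)
out = fuse(mid, glob, weight, bias)
print(out.shape)  # (32, 40, 256)
```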

Colorization network: the fused features are processed by a set of convolutional and upsampling layers. The output layer is a convolutional layer with a sigmoid transfer function that outputs the chrominance of the grayscale image.
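A rough sketch of the decoder side follows. The layer list (conv 128, upsample, conv 64, conv 64, upsample, conv 32, conv 2) and the use of nearest-neighbour upsampling are assumptions based on the paper, and the helper names are hypothetical. Starting from H/8 × W/8, two ×2 upsampling steps leave the sigmoid output at half the input resolution, so one more ×2 upsampling brings the chrominance back to the size of the grayscale image.

```python
import numpy as np

# Assumed layer list of the colorization network: (kind, filters).
LAYERS = [("conv", 128), ("up", None), ("conv", 64), ("conv", 64),
          ("up", None), ("conv", 32), ("conv", 2)]

def colorization_shape(h, w, c=256):
    """Trace (H, W, C) from the fused features (at H/8 x W/8) to the output."""
    for kind, filters in LAYERS:
        if kind == "up":
            h, w = 2 * h, 2 * w  # nearest-neighbour upsampling doubles H and W
        else:
            c = filters          # stride-1 3x3 convolution keeps H and W
    return h, w, c

def upsample_nearest(x, factor=2):
    """Nearest-neighbour upsampling of an (H, W, C) array."""
    return x.repeat(factor, axis=0).repeat(factor, axis=1)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# For a 256x320 input the fused features are 32x40x256.
print(colorization_shape(32, 40))  # (128, 160, 2)

pre_activation = np.zeros((128, 160, 2))  # stand-in for the last convolution's output
ab = sigmoid(pre_activation)              # chrominance squashed into (0, 1)
ab_full = upsample_nearest(ab)            # (256, 320, 2): back at input resolution
```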

The architecture details are as shown below:

All components are trained jointly in an end-to-end fashion. The predicted chrominance is combined with the input luminance to form the output image.
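Recombining the two parts can be sketched as stacking the input luminance with the rescaled network output. The (ab + 128) / 255 normalization assumed here is not confirmed by this repository; whatever scaling was used to build the training targets must be inverted here. The resulting CIE L\*a\*b\* array can then be converted to RGB, for example with scikit-image's skimage.color.lab2rgb. The function names are hypothetical.

```python
import numpy as np

def denormalize_ab(ab_unit):
    """Map the network's (0, 1) output back to a*, b* values.

    Assumes the targets were built as (ab + 128) / 255 during training."""
    return ab_unit * 255.0 - 128.0

def combine_lab(luminance, ab_unit):
    """luminance: (H, W) L channel in [0, 100]; ab_unit: (H, W, 2) sigmoid output.

    Returns an (H, W, 3) CIE L*a*b* image."""
    ab = denormalize_ab(ab_unit)
    return np.concatenate([luminance[..., None], ab], axis=-1)

luminance = np.full((4, 4), 50.0)
ab_unit = np.full((4, 4, 2), 0.75)
lab = combine_lab(luminance, ab_unit)
print(lab.shape, lab[0, 0])
```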

The network is trained with the following steps:

- Convert the colour image into grayscale and into the CIE L\*a\*b\* colour space.
- Pass the grayscale image as input to the model.
- Compute the MSE between the target chrominance (the a\*b\* channels) and the output of the colorization network.
- Backpropagate the loss through all the networks (global features, mid-level features, and low-level features) to update all the parameters of the model.
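The data-preparation and loss steps above can be sketched in NumPy. The sRGB-to-L\*a\*b\* conversion uses the standard D65 formulas (skimage.color.rgb2lab in scikit-image does the same job); using the L channel as the grayscale input and scaling a\*b\* by (ab + 128) / 255 are assumptions, and all function names are hypothetical. The backpropagation step itself is left to TensorFlow's automatic differentiation and is not shown.

```python
import numpy as np

# sRGB (D65) -> linear RGB -> XYZ -> CIE L*a*b*, standard formulas.
_RGB_TO_XYZ = np.array([
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
])
_WHITE_D65 = np.array([0.95047, 1.0, 1.08883])

def rgb_to_lab(rgb):
    """rgb: (H, W, 3) floats in [0, 1]. Returns (H, W, 3) with L in [0, 100]."""
    linear = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = linear @ _RGB_TO_XYZ.T / _WHITE_D65  # normalized by the reference white
    delta = 6.0 / 29.0
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)

def make_training_pair(rgb):
    """Split an RGB image into a network input and a regression target."""
    lab = rgb_to_lab(rgb)
    gray = lab[..., :1]                      # input: L channel only, (H, W, 1)
    target = (lab[..., 1:] + 128.0) / 255.0  # target: a*, b* scaled to about [0, 1] (assumed)
    return gray, target

def mse(pred, target):
    """Mean squared error between predicted and target chrominance."""
    return float(np.mean((pred - target) ** 2))

rgb = np.random.default_rng(0).random((64, 64, 3))
gray, target = make_training_pair(rgb)
prediction = np.full_like(target, 0.5)  # stand-in for the network's sigmoid output
print(gray.shape, target.shape, mse(prediction, target))
```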

DATASET: folder containing the train and test datasets.

SOURCE: folder containing the source code.

- config.py: model-related configuration settings.
- data.py: class for loading the dataset.
- model.py: class for model operations.
- neural_network.py: class implementing the various layers used in the model.
- main.py: driver program.