- Why-So-Deep: Towards Boosting Previously Trained Models for Visual Place Recognition (RA-L 2022) [Arxiv] | [project website] | [Bibtex]

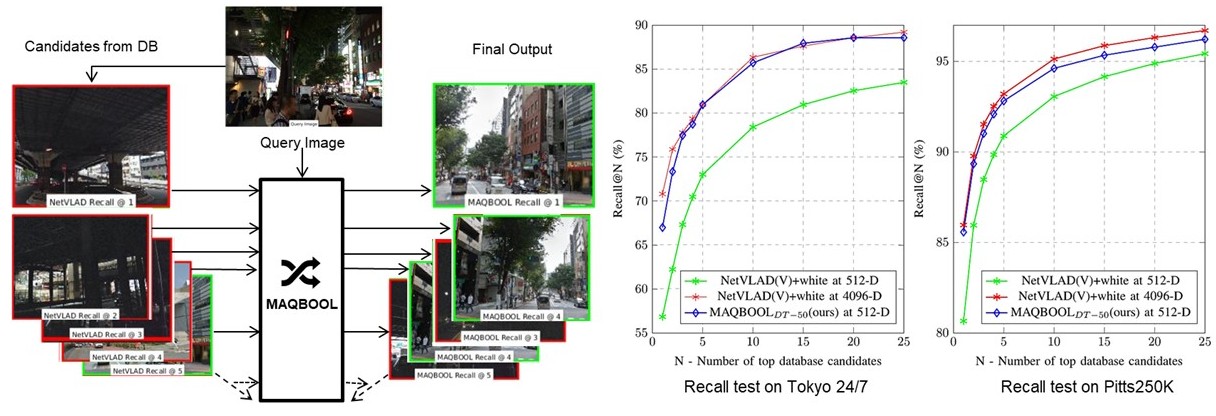

- Method (Ours): MAQBOOL: Multiple AcQuisition of perceptiBle regiOns for priOr Learning

Please refer to INSTALL.md for installation and dataset preparation.

To reproduce the results in the paper, train and test the models by following the instructions in REPRODUCTION.md.

MAQBOOL performance comparison with APANet and NetVLAD at 512-D.

| Method | Whitening | Tokyo 24/7 Recall@1 | Tokyo 24/7 Recall@5 | Tokyo 24/7 Recall@10 | Pitts250k-test Recall@1 | Pitts250k-test Recall@5 | Pitts250k-test Recall@10 |
|---|---|---|---|---|---|---|---|
| Sum pooling | PCA whitening | 44.76 | 60.95 | 70.16 | 74.13 | 86.44 | 90.18 |
| Sum pooling | PCA-pw | 52.7 | 67.3 | 73.02 | 75.63 | 88.01 | 91.75 |
| NetVLAD | PCA whitening | 56.83 | 73.02 | 78.41 | 80.66 | 90.88 | 93.06 |
| NetVLAD | PCA-pw | 58.73 | 74.6 | 80.32 | 81.95 | 91.65 | 93.76 |
| APANet | PCA whitening | 61.9 | 77.78 | 80.95 | 82.32 | 90.92 | 93.79 |
| APANet | PCA-pw | 66.98 | 80.95 | 83.81 | 83.65 | 92.56 | 94.7 |
| MAQBOOL (ours) | PCA whitening + DT-50 | 68.25 | 79.37 | 83.49 | 85.45 | 92.62 | 94.58 |
| MAQBOOL (ours) | PCA whitening + DT-100 | 69.21 | 80.32 | 84.44 | 85.46 | 92.77 | 94.72 |
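For readers unfamiliar with the metric, Recall@N in place recognition is usually computed as the percentage of queries for which at least one of the top N retrieved database images is a true match. The following is a minimal Python sketch of that standard definition, not the evaluation code of this repo; the function name `recall_at_n` and the input layout (one ranked list and one set of true matches per query) are assumptions made for illustration.

```python
def recall_at_n(ranked_ids, positives, ns=(1, 5, 10)):
    """Percentage of queries with at least one true match in the top N.

    ranked_ids: one list per query of database indices, best match first.
    positives:  one set per query of database indices that count as correct.
    Both layouts are hypothetical; adapt them to your own retrieval output.
    """
    hits = {n: 0 for n in ns}
    for ranking, pos in zip(ranked_ids, positives):
        for n in ns:
            # A query counts once per N if any of its top-N results is correct.
            if any(db in pos for db in ranking[:n]):
                hits[n] += 1
    total = len(ranked_ids)
    return {n: 100.0 * hits[n] / total for n in ns}


# Toy example with four queries and three retrieved images each.
rankings = [[3, 7, 1], [2, 5, 9], [4, 0, 8], [6, 1, 2]]
truth = [{3}, {9}, {5}, {1, 2}]
scores = recall_at_n(rankings, truth, ns=(1, 2, 3))
print(scores)
```

In the toy example, one query is matched at rank 1, one at rank 2, one at rank 3, and one never, so recall rises by a quarter at each N.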

To compare your work against MAQBOOL results on the Pittsburgh and Tokyo 24/7 datasets at 4096-D and 512-D, you can use the dat files in the results folder.

The naming of these dat files is explained on our project page.

If you need help plotting the results with TikZ and LaTeX, please follow this little tutorial.
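If you would rather inspect the numbers in Python before plotting, a small parser can help. The sketch below assumes a layout that this README does not confirm: plain text with two whitespace-separated columns (N, then recall in percent), optionally preceded by a header row or comment lines. Check the actual dat files and the project page before relying on it; `parse_recall_dat` is a hypothetical helper, and the sample rows reuse the MAQBOOL DT-100 Tokyo 24/7 values from the table above.

```python
def parse_recall_dat(text):
    """Parse 'N recall' rows from dat-style text (assumed two-column layout).

    Blank lines, lines starting with '#' or '%', and non-numeric header
    rows are skipped.
    """
    points = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line[0] in "#%":
            continue
        fields = line.split()
        try:
            n, recall = int(fields[0]), float(fields[1])
        except (ValueError, IndexError):
            continue  # header row or malformed line
        points.append((n, recall))
    return points


# Sample content in the assumed layout; values taken from the 512-D table.
sample = """N recall
1 69.21
5 80.32
10 84.44
"""
points = parse_recall_dat(sample)
print(points)
```

To read a real file, pass the contents of `open(path).read()` to the function, where `path` points at one of the dat files in the results folder.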

Generating thumbnails for the top-5 results

Open config_wsd.m and set show_output = 1.

If you find this repo useful for your research, please consider citing the paper:

    @article{whysodeepBhutta22,
      author={Bhutta, M. Usman Maqbool and Sun, Yuxiang and Lau, Darwin and Liu, Ming},
      journal={IEEE Robotics and Automation Letters},
      title={Why-So-Deep: Towards Boosting Previously Trained Models for Visual Place Recognition},
      year={2022},
      volume={7},
      number={2},
      pages={1824-1831},
      doi={10.1109/LRA.2022.3142741}
    }

The structure of this repo is inspired by NetVLAD.

Documentation is available on the project website. For installation, follow the guide in INSTALL.md.

Why-So-Deep is released under the MIT license.