
yolov5 FPS boost #10496


Hi, I'm not sure what your setup and requirements are, but I always run my inference in C++ using OpenCV's dnn module built with CUDA and cuDNN, on an NVIDIA Quadro RTX 4000. Another option for you might be an Intel Neural Compute Stick 2 (NCS2). Again, it's hard to make a recommendation without knowing the full context. Hope this helps, good luck! 🚀
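For reference, here is a minimal Python sketch of that kind of setup. It assumes the trained weights were exported to ONNX (for example with YOLOv5's `export.py --include onnx`) and that OpenCV was built with CUDA support. The function names and the file name `best.onnx` are hypothetical. It only covers backend selection and the forward pass. Box decoding and NMS are omitted.

```python
def load_net(onnx_path, use_cuda=True):
    """Load an ONNX-exported YOLOv5 model into OpenCV's dnn module."""
    import cv2  # imported lazily so this sketch loads even without OpenCV installed

    net = cv2.dnn.readNetFromONNX(onnx_path)
    if use_cuda:
        # Only effective if OpenCV was built with CUDA/cuDNN;
        # otherwise OpenCV is expected to warn and fall back to the CPU.
        net.setPreferableBackend(cv2.dnn.DNN_BACKEND_CUDA)
        net.setPreferableTarget(cv2.dnn.DNN_TARGET_CUDA)
    return net


def run_inference(net, frame, size=640):
    """Run one forward pass on a BGR frame, e.g. from cv2.VideoCapture.read()."""
    import cv2

    # Plain resize to size x size, scale pixels to [0, 1], swap BGR -> RGB.
    # YOLOv5 itself letterboxes (pads to keep the aspect ratio); this is a simplification.
    blob = cv2.dnn.blobFromImage(frame, scalefactor=1 / 255.0, size=(size, size),
                                 swapRB=True, crop=False)
    net.setInput(blob)
    return net.forward()  # raw predictions; box decoding and NMS are not shown
```

Inside a capture loop, you would call `net = load_net("best.onnx")` once and then `preds = run_inference(net, frame)` for each frame.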


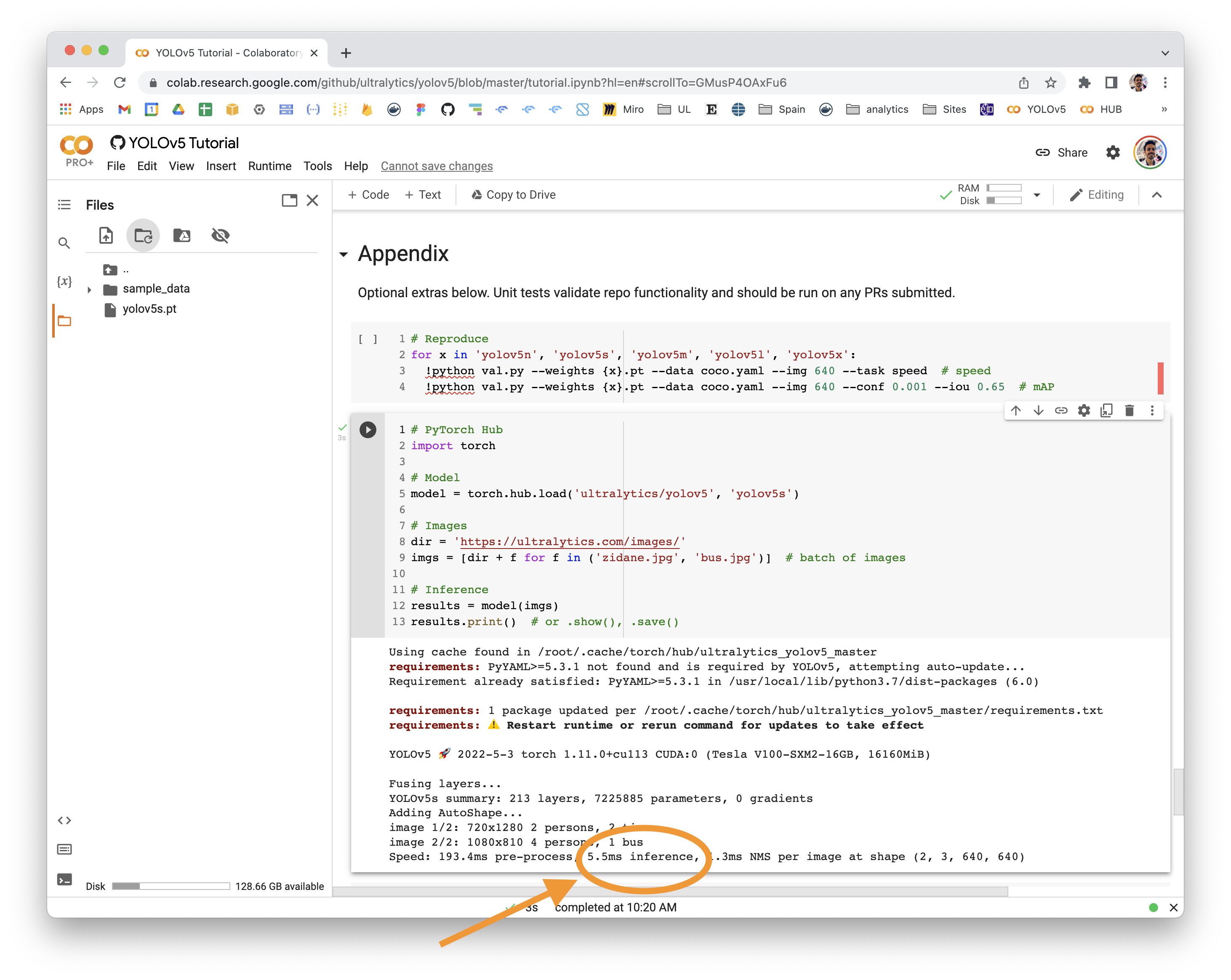

@ozlematiz 👋 Hello! Thanks for asking about inference speed issues. PyTorch Hub speeds will vary by hardware, software, model, inference settings, etc. Our default example in Colab with a V100 looks like this:

YOLOv5 🚀 can be run on CPU (i.e. …)

detect.py inference

```bash
python detect.py --weights yolov5s.pt --img 640 --conf 0.25 --source data/images/
```

YOLOv5 PyTorch Hub inference

```python
import torch

# Model
model = torch.hub.load('ultralytics/yolov5', 'yolov5s')

# Images
dir = 'https://ultralytics.com/images/'
imgs = [dir + f for f in ('zidane.jpg', 'bus.jpg')]  # batch of images

# Inference
results = model(imgs)
results.print()  # or .show(), .save()
# Speed: 631.5ms pre-process, 19.2ms inference, 1.6ms NMS per image at shape (2, 3, 640, 640)
```

Increase Speeds

If you would like to increase your inference speed, some options are:

Good luck 🍀 and let us know if you have any other questions!
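The `Speed:` line printed by `results.print()` splits per-image time into pre-process, inference and NMS. When the model runs inside a larger loop, such as a Flask app reading from a camera or a video file, frame reading and response encoding also take time. It helps to measure those stages the same way. Below is a minimal sketch of a per-stage timer. `StageTimer` and the stage names are hypothetical, and the `time.sleep` calls stand in for real work such as `cap.read()` or the model call.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Accumulate wall-clock time per named stage (hypothetical helper)."""

    def __init__(self):
        self.totals = defaultdict(float)  # stage name -> total seconds
        self.counts = defaultdict(int)    # stage name -> number of timed calls

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the elapsed time even if the timed block raised.
            self.totals[name] += time.perf_counter() - start
            self.counts[name] += 1

    def summary(self):
        """Return the average milliseconds per call for each stage."""
        return {name: 1000 * self.totals[name] / self.counts[name] for name in self.totals}


if __name__ == "__main__":
    timer = StageTimer()
    for _ in range(3):
        with timer.stage("read"):
            time.sleep(0.01)  # stand-in for reading a frame
        with timer.stage("inference"):
            time.sleep(0.02)  # stand-in for the model call
    print({name: round(ms, 1) for name, ms in timer.summary().items()})
```

If the "read" stage dominates for the IP webcam or MP4 source but not for the USB camera, the bottleneck is likely frame acquisition rather than the model.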


👋 Hello, this issue has been automatically marked as stale because it has not had recent activity. Please note it will be closed if no further activity occurs.

Feel free to inform us of any other issues you discover or feature requests that come to mind in the future. Pull Requests (PRs) are also always welcome! Thank you for your contributions to YOLOv5 🚀 and Vision AI ⭐!


Question

Hello there. I trained YOLOv5s on my own custom dataset to build a license plate recognition system, which I run on the web using Python's Flask framework. With a USB camera I get 5 FPS, but when the source is an IP webcam stream or an MP4 file I only get 1 FPS. Can you help me increase the FPS?

Thank you @jkocherhans @adrianholovaty @cgerum @farleylai @glenn-jocher @Nioolek

Video of the project while it is running: https://vimeo.com/781019379
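One possible factor, not confirmed in this thread: if reading and decoding each frame from the IP stream is slow, doing it on the same thread as inference adds both costs to every frame. A common workaround is to read frames on a background thread and run inference only on the newest one. Below is a minimal sketch. It assumes a capture object with an OpenCV-style `read()` that returns `(ok, frame)`, such as `cv2.VideoCapture(url)`. `LatestFrameReader` is a hypothetical name, not part of YOLOv5 or OpenCV.

```python
import threading


class LatestFrameReader:
    """Read frames on a background thread, keeping only the newest one.

    `source` is assumed to expose read() -> (ok, frame), like cv2.VideoCapture.
    """

    def __init__(self, source):
        self.source = source
        self._lock = threading.Lock()
        self._frame = None
        self._seq = 0          # number of frames read so far
        self._running = True
        self._thread = threading.Thread(target=self._loop, daemon=True)
        self._thread.start()

    def _loop(self):
        while self._running:
            ok, frame = self.source.read()
            if not ok:         # stream ended or the connection dropped
                self._running = False
                break
            with self._lock:
                self._frame = frame  # overwrite: older unprocessed frames are discarded
                self._seq += 1

    def latest(self):
        """Return (sequence_number, frame); frame is None until one arrives."""
        with self._lock:
            return self._seq, self._frame

    def stop(self):
        self._running = False
        self._thread.join(timeout=1.0)
```

In a Flask frame generator, you would call `seq, frame = reader.latest()` on each iteration and skip the frame when `seq` has not changed since the last one. This does not make the model itself faster. It only keeps frame reading from blocking inference. For an MP4 file, `read()` decodes as fast as it can rather than in real time, so this reader would skip most frames. For files, processing every Nth frame is a simpler alternative.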
