-

-

Notifications

You must be signed in to change notification settings - Fork 3.4k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

When multi-GPU training, my validation map value is very low. #1436

Comments

|

@buaazsj there are a few issues open regarding --resume that talk about this, you might want to search the issues a bit. |

|

Is there any update on this issue? I've been facing the same problem, low mAP when training on 2/4 gpu setting. Training on 1-gpu works perfectly fine. Edit. when training in a multi-gpu setting, the training loss (gIOU, cls, and obj) are the same as 1-gpu setting. Its only the validation loss + mAP that is reduced. Edit 2. Ok so this is weird. Evaluation using :func: test.test during training on multi-gpu gives low mAP. BUT, if you separately evaluate using the checkpoint that was saved to disk by running python test.py .... , for the same epoch, you get correct mAP! By correct, I mean mAP is similar to training with 1-gpu setting. I'm testing this on yolov3-tiny.cfg. Will report more when training finishes in 1-2 days. |

|

@akshaychawla hello, thank you for your interest in our work! This issue seems to lack the minimum requirements for a proper response, or is insufficiently detailed for us to help you. Please note that most technical problems are due to:

sudo rm -rf yolov5 # remove existing

git clone https://github.com/ultralytics/yolov5 && cd yolov5 # clone latest

python detect.py # verify detection

# CODE TO REPRODUCE YOUR ISSUE HERE

If none of these apply to you, we suggest you close this issue and raise a new one using the Bug Report template, providing screenshots and minimum viable code to reproduce your issue. Thank you! RequirementsPython 3.8 or later with all requirements.txt dependencies installed, including $ pip install -r requirements.txtEnvironmentsYOLOv5 may be run in any of the following up-to-date verified environments (with all dependencies including CUDA/CUDNN, Python and PyTorch preinstalled):

StatusIf this badge is green, all YOLOv5 GitHub Actions Continuous Integration (CI) tests are passing. These tests evaluate proper operation of basic YOLOv5 functionality, including training (train.py), testing (test.py), inference (detect.py) and export (export.py) on MacOS, Windows, and Ubuntu. |

I met the same question. Have you solved it? |

|

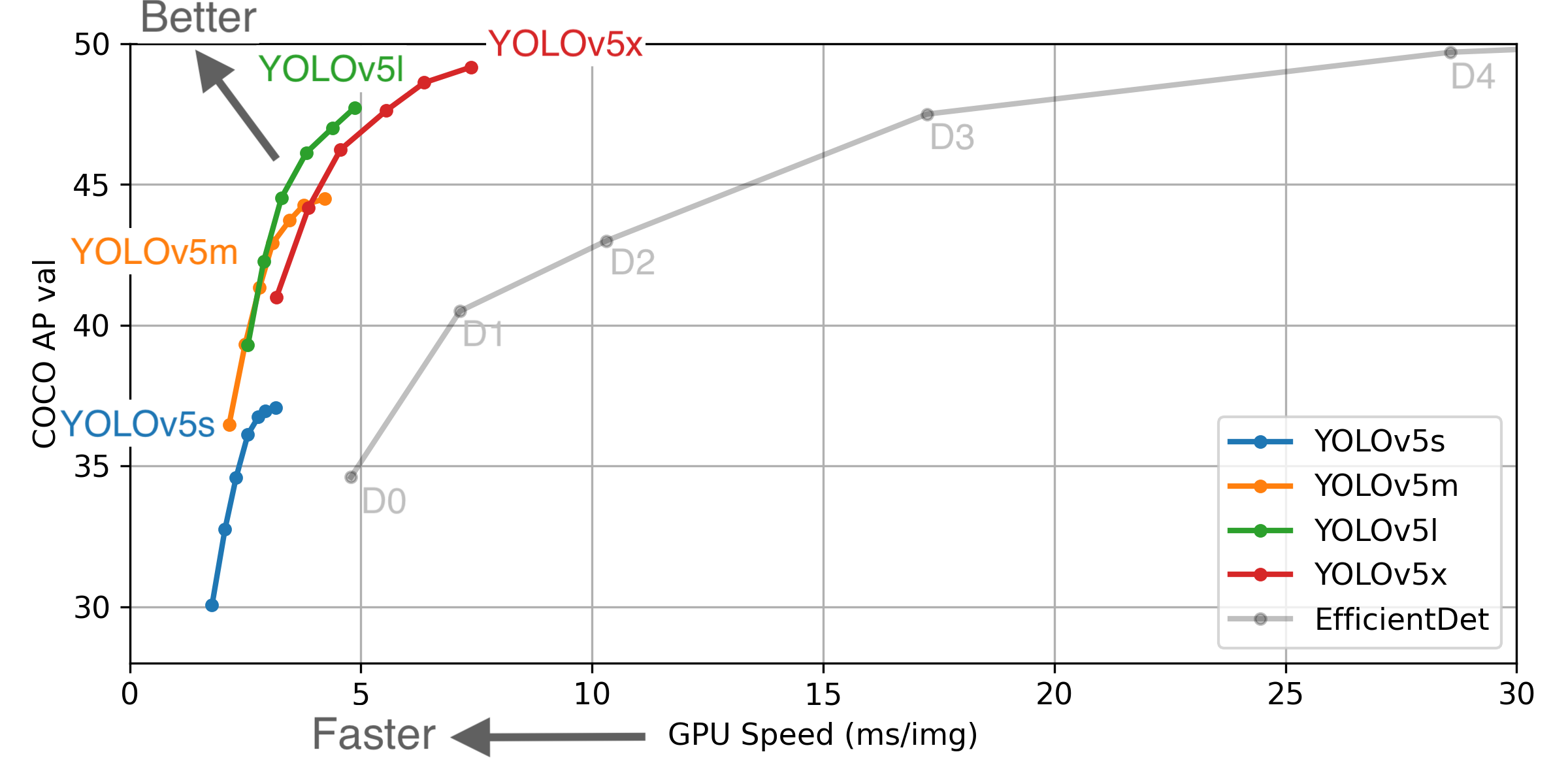

Ultralytics has open-sourced YOLOv5 at https://github.com/ultralytics/yolov5, featuring faster, lighter and more accurate object detection. YOLOv5 is recommended for all new projects.

** GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS. EfficientDet data from [google/automl](https://github.com/google/automl) at batch size 8.

Pretrained Checkpoints

** APtest denotes COCO test-dev2017 server results, all other AP results in the table denote val2017 accuracy. For more information and to get started with YOLOv5 please visit https://github.com/ultralytics/yolov5. Thank you! |

|

Here are the results: issue When training with multiple GPUs on DDP, validation mAP is lower than expected. observation If we test a serialized version of the ddp model (i.e serialize to disk using torch.save and then serialize using torch.load), the validation mAP is fine. fix During training instead of running test.test using the model that is being currently trained using DDP, write a temporary checkpoint to disk and tell test to create a new model and load that checkpoint and run validation with it. Why does it work? No idea. Just dumb luck I guess. If I had to guess, it looks like when we move model.module.yolo_layers to model.yolo_layers after DDP init, and then switch to eval using model.eval(), it may not be switching eval on for the yolo_layers since they're outside model.module. OR, it might have something to do with the test dataloader. The minor changes required for train.py & test.py can be viewed here: akshaychawla@4e5eaab Another observation is that when I increased batch-size to 128 and train the model, I was expecting it to perform worse because higher batch size means less gradient updates which means less performance. BUT, this repository has support for gradient accumulation using loss *= batchsize / 64 which I think was initially designed for cases where batchsize < 64. But for bs>64, it has the effect of scaling up the loss, which is same as scaling the learning rate hyp['lr0'] to accommodate higher batch-sizes. But more importantly, it speed up training.

Logs, results and checkpoints available at: Edit. https://drive.google.com/drive/folders/11CN50wq-e0COr9RnWPtBHgj8z-AfFBq6?usp=sharing |

|

@akshaychawla thanks so much for the detailed analysis! It looks like you've put in a lot of work and arrived at a very useful insight. I would highly recommend you try YOLOv5, it has a multitude of feature additions, improvements and bug fixes above and beyond this YOLOv3 repo. DDP is functioning correctly there, we use for training the largest official YOLOv5x model with no problems. |

|

@glenn-jocher Thank YOU for building, open-sourcing and then maintaining this and yolov5 repository! this is a very well written piece of software and I have learnt so much from it. Would love to transition to Yolov5, but reviewer 3 will ask me to compare to "existing state of the art" before a borderline reject, so my arms are tied to v3 for now 🤷♂️ |

|

@glenn-jocher |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

|

@goldwater668 It's great to hear about the comparison! YOLOv3 in the YOLOv5 repository features an updated architecture that may outperform YOLOv5 in certain scenarios. YOLOv5, however, offers a significant number of improvements and optimizations over YOLOv3. I would recommend reviewing the recent updates and optimizations in YOLOv5 to ensure an Apple's-to-Apple's comparison. Thank you! |

❔Question

When multi-GPU training, my validation map value is very low.

But when the training is interrupted and then resumed, it becomes normal. Why?

Additional context

The text was updated successfully, but these errors were encountered: