-

-

Notifications

You must be signed in to change notification settings - Fork 3.4k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

CUDA out of memeory,I set batch_size 1,it does not work #1381

Comments

|

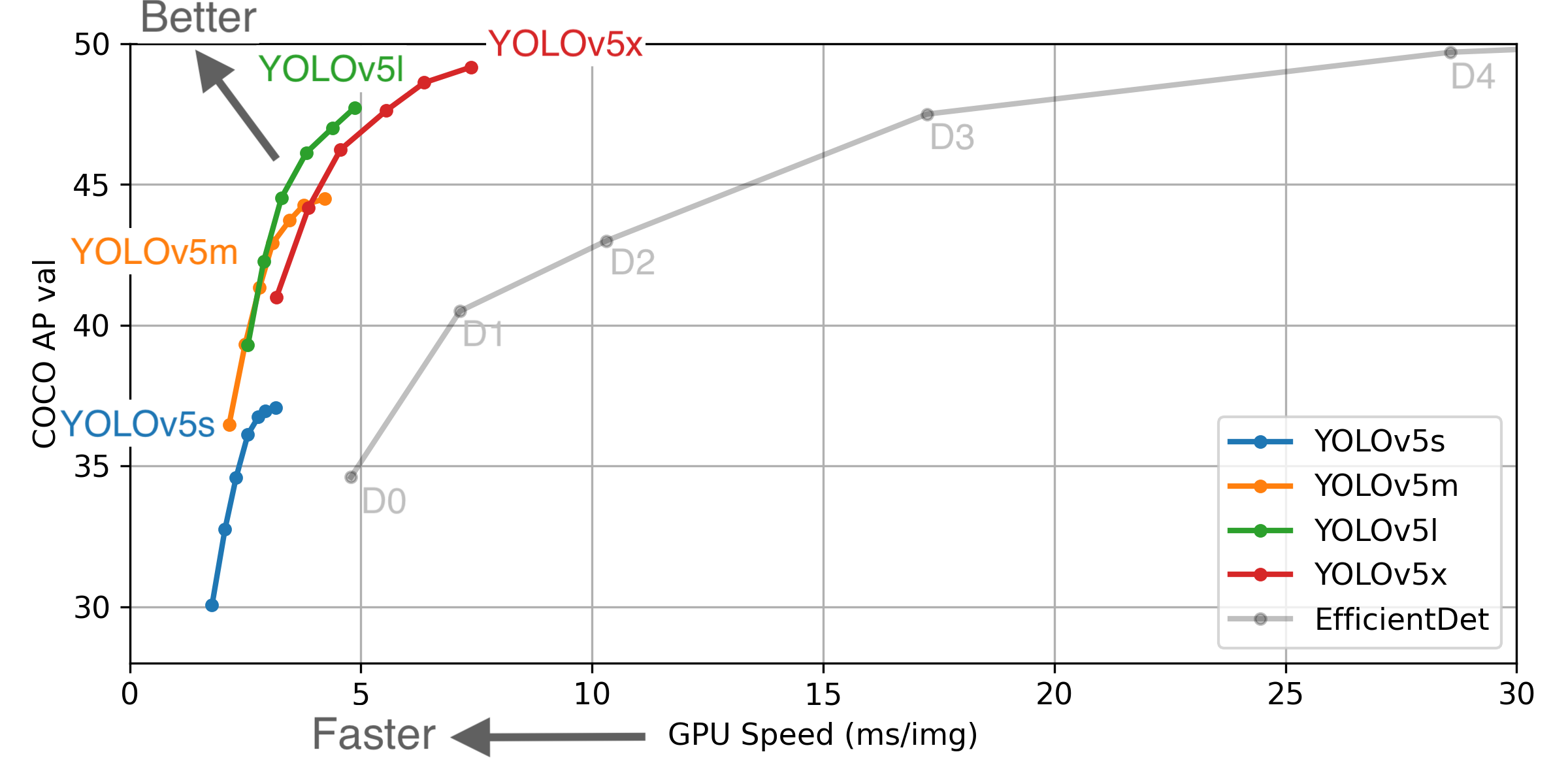

Ultralytics has open-sourced YOLOv5 at https://github.com/ultralytics/yolov5, featuring faster, lighter and more accurate object detection. YOLOv5 is recommended for all new projects.

** GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS. EfficientDet data from [google/automl](https://github.com/google/automl) at batch size 8.

Pretrained Checkpoints

** APtest denotes COCO test-dev2017 server results, all other AP results in the table denote val2017 accuracy. For more information and to get started with YOLOv5 please visit https://github.com/ultralytics/yolov5. Thank you! |

|

Try decreasing image size by adding: --img 512 or 416, 320. |

|

I have the same issue and could not solve it by decreasing the image size. |

|

emm, I get the same problem. |

|

I had same problem with my RTX 2070 (about 8 Gb VRAM). Changed my command to: |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

|

@adodd202 glad to hear it's working for you now! It's common for the |

❔Question

c,I set batch_size 1,it does not work,My system is ubuntu 18.04 GTX1050,4GB Memory size.I set cfg images size 224,batch_size 1,when I try my custom dataset(it is coco dataset in face),like this

python train.py --batch_size 1

error occured:

CUDA out of memeory,

I think this project must have bug,it can not deal with memeory very well

Additional context

The text was updated successfully, but these errors were encountered: