SINGLE-CLASS TRAINING EXAMPLE #102

Comments

|

This is great! Scrolling through the code, I was able to figure these things out before, but I would suggest also explaining or showing what kind of modifications are required for the |

|

@tamasbalassa yes good idea. All done! |

|

To be complete, don't forget to add the modifications of the class numbers ( |

|

@glenn-jocher I have a question regarding your normalized labels. I have no problem with w and h, but how did you get the normalized x and y? Take as example an object from one image (image_id: 574200; COCO_val2014_000000574200.txt; category_id: 13) in the COCO validation set-- Relevant information from instances_val2014.json: {"license": 6, "file_name": "COCO_val2014_000000574200.jpg", "coco_url": "http://mscoco.org/images/574200", "height": 427, "width": 640, "date_captured": "2013-11-16 18:56:50", "flickr_url": "http://farm5.staticflickr.com/4018/4527879508_8f69659291_z.jpg", "id": 574200} {"segmentation": [[239.27, 139.42, 236.98, 140.04, 236.35, 159.03, 237.19, 171.97, 241.15, 186.58, 243.24, 186.37, 244.91, 165.5, 242.61, 149.02, 240.11, 140.04]], "area": 277.9082500000005, "iscrowd": 0, "image_id": 574200, "bbox": [236.35, 139.42, 8.56, 47.16], "category_id": 13, "id": 1388638} I assume that the width/height of this image are respectively 640 and 427, and the raw COCO xywh is [236.35, 139.42, 8.56, 47.16]. The corresponding normalized coordinates in your labels, as I see, are: 11 0.375984 0.381733 0.013375 0.110445 While 0.013375=8.56/640 and 0.110445=47.16/427, I don't know how the normalized x and y, i.e., 0.375984 and 0.381733 were obtained. Could you please elaborate on this point? Many thanks! |

|

@graftedlife according to http://cocodataset.org/#format-data, the COCO "bbox" is already in xywh, so to transform this to darknet format we should just need to divide by the image width and height. But if we divide x and w by the image width 640, and y and h by the image height 427, the resulting x and y do not match our darknet labels. So the COCO xy coordinates must represent a corner point of the bounding box (its top-left corner) rather than its center. If we correct for this offset by adding half the box width and height before dividing, the results match. And in the COCO link above you can see it says "box coordinates are measured from the top left image corner". |
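The corner-to-center correction described above can be checked against the numbers quoted in the question. This is a minimal sketch, not the script used to generate the repo's labels; the function name `coco_to_darknet` is made up for illustration.

```python
def coco_to_darknet(bbox, img_w, img_h):
    """Convert a COCO [x_min, y_min, w, h] pixel box to normalized (xc, yc, w, h)."""
    x_min, y_min, w, h = bbox
    # COCO gives the top-left corner; darknet wants the box center.
    x_center = (x_min + w / 2) / img_w
    y_center = (y_min + h / 2) / img_h
    return x_center, y_center, w / img_w, h / img_h


# Values from the question: image 574200 is 640 x 427 pixels.
box = coco_to_darknet([236.35, 139.42, 8.56, 47.16], img_w=640, img_h=427)
row = " ".join(f"{v:.6f}" for v in box)
print(row)  # 0.375984 0.381733 0.013375 0.110445
```

The printed row agrees with the four coordinates in the darknet label quoted above. The leading class index (11 in the label versus category_id 13 in the JSON) is a separate remapping that this sketch does not cover.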

|

@glenn-jocher |

|

@cy0616 can you run a line profiler to find the slow areas? Yes, NMS is very slow, but it is not run during training, only at inference time. COCO validation takes about 2-3 minutes for 5000 images on a P100. If you see similar time ranges on COCO training then the problem is specific to your dataset. If you find ways to speed up the code, PRs are welcome! |

|

@graftedlife I only read your question this morning, so I quickly wrote some code to test both COCO- and KITTI-style data. It is not for converting, only for checking whether the bounding box coordinates are correct. PS: it's just a quick solution, not extensively tested. The aim was to make it easy for everyone to understand. |

|

Hi, I have a question about using the validation set. In my opinion, we should use a validation set to assess the performance of the trained model. But I have found that only the training set is used for this. Thus, I suggest you add the validation set to train.py. |

|

@JacksonLY the validation set is already used during training to compute mAP after each epoch. This is done by calling test.py to evaluate Lines 169 to 171 in eb6a4b5

|

Thanks for your reply, I understand this flow now. I found that many people need to train a customized model starting from a model pre-trained on COCO. Thus, I added some code to train.py (lines 70~90) as follows: I have tested this code by retraining on a customized dataset and hope it is helpful. |

|

When training this single-class model, my question is: why is my trained model a little faster (~13 fps) than the original yolov3-tiny model? |

|

@glenn-jocher Hi bro, thanks for your awesome work. |

|

@WannaSeaU yes this is expected. If there is only a single class, how can the network guess the wrong class? Imagine if I asked you to guess a random number between zero and zero... you'd probably be correct every time as well no? |

|

@glenn-jocher Thank you for replying to such a basic question. |

|

@glenn-jocher hi, as the following picture shows, if I train one class and a picture doesn't have an object in it, do I not need to create a label file for this picture, or should I create a label file which contains nothing? |

|

@XiaoJiNu if there are no objects in your training image you don't need to supply a label file. An empty file may work as well, try it out. |
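To see which training images would be treated as having no targets, one can derive each label path the way the guide describes (replacing /images/ with /labels/ and the image extension with .txt) and check whether the file exists. This is a rough sketch under that assumption; `label_path_for` and `images_without_labels` are illustrative names, not functions from the repo.

```python
import os


def label_path_for(image_path):
    """Derive the label path by swapping the images folder and the extension."""
    stem, _ = os.path.splitext(image_path.replace("/images/", "/labels/"))
    return stem + ".txt"


def images_without_labels(image_paths):
    """Return the images whose derived label file does not exist on disk."""
    return [p for p in image_paths if not os.path.exists(label_path_for(p))]


print(label_path_for("data/coco/images/train2014/example.jpg"))
# data/coco/labels/train2014/example.txt
```

Running `images_without_labels` over the lines of a train `*.txt` file gives a quick list of the images that will be loaded as background-only.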

|

thank you @glenn-jocher , i will try it |

|

@glenn-jocher Hi, I was following your tutorial and it works great, thanks for the tutorial. I have several questions regarding training with our own dataset:

Also, if I would like to train 5 classes, does this mean I'm going to have where each of them has pics for training purposes? |

|

@Jriandono you're welcome! Regarding your question, no, this is not correct. You only use one *.txt file for your training set and one *.txt file for your test set (they can be the same, as in the demo). This is the same no matter how many classes you have. For multiple class training on custom data please see https://docs.ultralytics.com/yolov5/tutorials/train_custom_data |

|

Got another question. You mentioned that you created a txt file from the first 10 images of COCO and use it for both training and testing. I only have one txt file for images; should I duplicate it for testing as well? I didn't understand what you mean by that. Did you split the data first and then make a txt file for each part, or do you use one txt file for both testing and training? |

|

Hi Glenn, regarding normalizing x and y of center coordinates. Should the approach you explained to @graftedlife be applied to custom data as well? Should center coordinates first be multiplied by width and height and then divided by image size? Or is it different for custom data, and this applies only to the COCO dataset? |

|

@sanazss all data is handled identically by the repo. All data must be in the darknet format. The coco dataset you use for the tutorials is provided in darknet format. |

|

Hi guys, I just need some help. I am training a single class with my custom data. I followed the example exactly, but am experiencing the following. I have changed the classes to "1" in the file.data and yolo3.cfg |

|

@sip-ops your data has class numbers that exceed 0. If you are training a single class model then all the classes must be 0. |
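If every labelled object should count as the same single class, the class column of each label row can be rewritten to 0. This is a minimal sketch that works on the text of one label file; `to_single_class` is a hypothetical helper, not part of the repo.

```python
def to_single_class(label_text):
    """Rewrite every row of a darknet label file so that its class index is 0."""
    rows = []
    for line in label_text.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        # Keep the four box values, replace only the class index.
        rows.append(" ".join(["0"] + parts[1:]))
    return "\n".join(rows)


original = "11 0.375984 0.381733 0.013375 0.110445\n3 0.5 0.5 0.1 0.1"
print(to_single_class(original))
```

Note that this merges all classes into one. If the labels contain several classes and only one of them should be kept, the other rows would need to be dropped rather than remapped.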

|

@glenn-jocher you are absolutely correct, I had to change it in all labels, thanks it works now. |

|

@yangxu351 I don't recall exactly, but the COCO breakdown is 120k training images and 5k validation images, so I'd use similar proportions in xview (i.e. 95%-5%). |
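A 95%-5% split like the one suggested above can be produced by shuffling the list of image paths once and writing two text files. This is only a sketch; the function names, the default fraction and the fixed seed are assumptions chosen for illustration.

```python
import random


def split_image_list(image_paths, val_fraction=0.05, seed=0):
    """Shuffle image paths reproducibly and return (train_paths, val_paths)."""
    paths = sorted(image_paths)
    random.Random(seed).shuffle(paths)
    n_val = max(1, round(len(paths) * val_fraction))
    return paths[n_val:], paths[:n_val]


def write_list(paths, txt_path):
    """Write one image path per row, the layout the train/test *.txt files use."""
    with open(txt_path, "w") as f:
        f.write("\n".join(paths) + "\n")


train, val = split_image_list([f"data/custom/images/{i}.jpg" for i in range(100)])
print(len(train), len(val))  # 95 5
```

The two lists can then be saved with `write_list(train, "data/train.txt")` and `write_list(val, "data/val.txt")`, where both file names are placeholders.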

|

Thank You for this great guide! I seem to have a problem with I think paths here. What am I doing wrong? I work in Jupyter with Pytorch on Windows 10. Thnx a lot in advance for taking a look! RuntimeError Traceback (most recent call last) coco.data: coco.names: yolov3.cfg: (changes) data has the structure data/artifacts/images (dir), labels (dir), train.txt, val.txt the params are: The dataloader delivers with (if tested just before the training loop) Thnx a lot for any suggestion! |

|

@Andy7775 you might want to start from a working environment, like a colab instance and inspect the paths there for the tutorial *.data files. If you are doing single class, you can use yolov3-spp-1cls.cfg. Note I've updated the tutorial to reflect the latest code for reproducing our results. Run those lines and start from there. |
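For anyone editing a cfg by hand instead of using the single-class file, the guide's rule is filters=[4 + 1 + n] * 3 for the layer preceding each YOLO layer. A small helper makes the arithmetic explicit; `yolo_filters` is an illustrative name only.

```python
def yolo_filters(num_classes, anchors_per_layer=3):
    """Output channels before a YOLO layer: (4 box + 1 objectness + classes) per anchor."""
    return (4 + 1 + num_classes) * anchors_per_layer


print(yolo_filters(80))  # 255, the default COCO value
print(yolo_filters(1))   # 18, single-class training
```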

|

Thnx, I got it. |

|

@Andy7775 great! |

|

@claire-0702 you likely have no labels in your test set. Start from the tutorial. |

|

@glenn-jocher hi, I have changed train.txt and test.txt for my dataset. The images and the labels were set up the same as in your tutorial. Figure one shows my dataset. |

|

@claire-0702 upload your train_batch0.png and test_batch0.png images here please. |

|

@glenn-jocher train_batch0.png and test_batch0.png |

|

@glenn-jocher I have the same problem just like @claire-0702, the values of P, R, GIoU loss, cls_loss are always zero, the following images are

I followed your tutorial https://github.com/ultralytics/yolov3/wiki/Example:-Train-Single-Class and ran the command below to start the training process. I then used the weights from epoch 100 to test my data, but there are no results in the output images. How can I fix it? |

|

@AndyTaiwan if GIoU loss is zero then no anchors are above the iou threshold. You can reduce the iou threshold here: Line 31 in 9ea8562

You should also replace the default anchors in the cfg with kmeans anchors for your specific dataset. @claire-0702 this may help you as well: Lines 691 to 696 in 9ea8562

Also, since the repo is updated often, |
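One rough way to check whether the default anchors suit a dataset is to compare label box shapes against them. The sketch below assumes that targets are matched to anchors by a width-height IoU (boxes compared as if they shared a center) and that images are scaled to 416 pixels; both are assumptions for illustration and may not match the repo's current code. The anchor list is the one in the standard yolov3.cfg.

```python
def wh_iou(wh_a, wh_b):
    """IoU of two boxes that share a center, given only (width, height)."""
    inter = min(wh_a[0], wh_b[0]) * min(wh_a[1], wh_b[1])
    union = wh_a[0] * wh_a[1] + wh_b[0] * wh_b[1] - inter
    return inter / union


# Anchors from the standard yolov3.cfg, in pixels.
ANCHORS = [(10, 13), (16, 30), (33, 23), (30, 61), (62, 45),
           (59, 119), (116, 90), (156, 198), (373, 326)]


def best_anchor_iou(norm_w, norm_h, img_size=416):
    """Best width-height IoU between a normalized label box and any anchor."""
    box = (norm_w * img_size, norm_h * img_size)
    return max(wh_iou(box, anchor) for anchor in ANCHORS)


# The narrow box from the COCO example earlier in this thread.
print(round(best_anchor_iou(0.013375, 0.110445), 3))
```

If most label boxes score below the threshold in use, either lowering the threshold or recomputing anchors for the dataset, as suggested above, would be the things to try.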

|

I have the same problem. After adding a lot more data, the GIoU is now non-zero, but the Cls is still all 0. What can cause this issue? |

|

From the visual results you posted above, I just realized that the Cls loss, P, R, mAP are all 0 if a single class cfg file is used. Is that what you intend to do? |

|

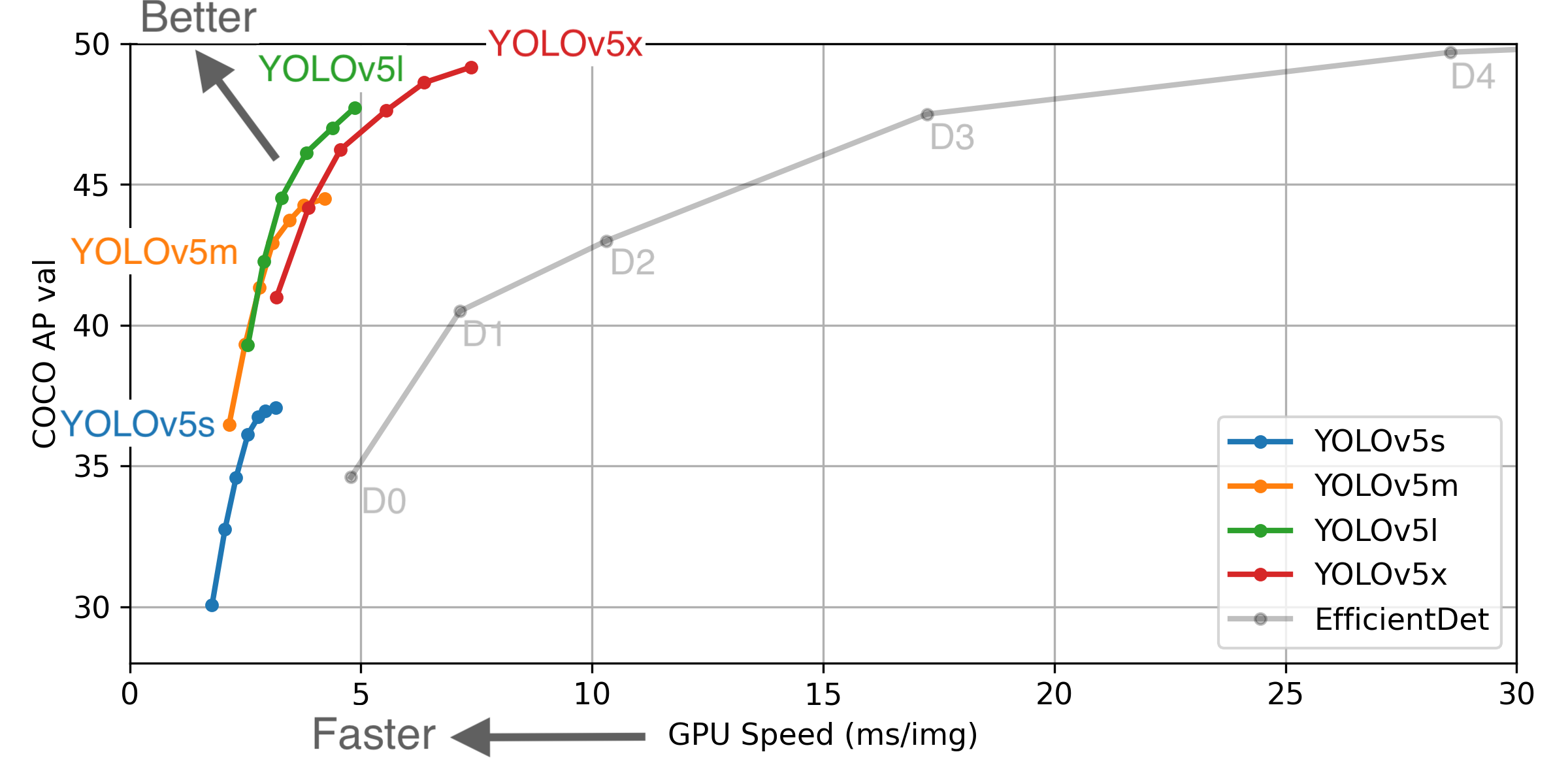

Ultralytics has open-sourced YOLOv5 at https://github.com/ultralytics/yolov5, featuring faster, lighter and more accurate object detection. YOLOv5 is recommended for all new projects.

** GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS. EfficientDet data from [google/automl](https://github.com/google/automl) at batch size 8.

Pretrained Checkpoints

** APtest denotes COCO test-dev2017 server results, all other AP results in the table denote val2017 accuracy. For more information and to get started with YOLOv5 please visit https://github.com/ultralytics/yolov5. Thank you! |

|

Hi guys. I have a problem with single-class detection with yolov3. There is no problem while training, it works well. But while detecting objects from video, I have a problem with class_number, which is 1 in my case. If I change class_number to 2 or another number and update obj.names to a list of 2 or more object names, it works. But for only one object it gives me the error below. Any help, please? Traceback (most recent call last): |

|

|

Hey @glenn-jocher |

|

thank you guy's |

|

Why do I need to download the COCO dataset when I want to train on my custom dataset? |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

|

I have a question |

|

@soheilmajidi 👋 Hello! Thanks for asking about YOLOv3 🚀 dataset formatting. No changes are required to train single class. To train correctly your data must be in YOLOv5 format. Please see our Train Custom Data tutorial for full documentation on dataset setup and all steps required to start training your first model. A few excerpts from the tutorial:

1.1 Create dataset.yaml

COCO128 is an example small tutorial dataset composed of the first 128 images in COCO train2017. These same 128 images are used for both training and validation to verify our training pipeline is capable of overfitting. data/coco128.yaml, shown below, is the dataset config file that defines 1) the dataset root directory

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

path: ../datasets/coco128 # dataset root dir

train: images/train2017 # train images (relative to 'path') 128 images

val: images/train2017 # val images (relative to 'path') 128 images

test: # test images (optional)

# Classes

nc: 80 # number of classes

names: [ 'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',

'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',

'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',

'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',

'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',

'hair drier', 'toothbrush' ] # class names

1.2 Create Labels

After using a tool like Roboflow Annotate to label your images, export your labels to YOLO format, with one

The label file corresponding to the above image contains 2 persons (class

1.3 Organize Directories

Organize your train and val images and labels according to the example below. YOLOv5 assumes

../datasets/coco128/images/im0.jpg # image

../datasets/coco128/labels/im0.txt # label

Good luck 🍀 and let us know if you have any other questions! |

|

|

@koreanmarine hello! Great questions regarding training with YOLOv3:

Remember to maintain the same directory structure for images and labels, where the training script expects to find a Good luck with your training! 🚀 If you have any more questions, feel free to ask. |

This guide explains how to train your own single-class dataset with YOLOv3.

Before You Start

git clone https://github.com/ultralytics/yolov3
bash yolov3/data/get_coco2017.sh

Train On Custom Data

1. Label your data in Darknet format. After using a tool like Labelbox to label your images, you'll need to export your data to darknet format. Your data should follow the example created by get_coco2017.sh, with images and labels in separate parallel folders, and one label file per image (if no objects in image, no label file is required). The label file specifications are:

- Each row is in class x_center y_center width height format.
- Box coordinates are normalized: divide x_center and width by image width, and y_center and height by image height.

Each image's label file must be locatable by simply replacing /images/*.jpg with /labels/*.txt in its pathname. An example image and label pair would be:

An example label file with 4 persons (all class 0):

2. Create train and test *.txt files. Here we create data/coco_1cls.txt, which contains 5 images with only persons from the coco 2014 trainval dataset. We will use this small dataset for both training and testing. Each row contains a path to an image, and remember one label must also exist in a corresponding /labels folder for each image that has targets.

3. Create new *.names file listing all of the names for the classes in our dataset. Here we use the existing data/coco.names file. Classes are zero indexed, so person is class 0.

4. Update data/coco.data lines 2 and 3 to point to our new text file for training and validation (in your own data you would likely want to use separate train and test sets). Also update line 1 to our new class count, if not 80, and lastly update line 4 to point to our new *.names file, if you created one. Save the modified file as data/coco_1cls.data.

5. Update *.cfg file (optional). Each YOLO layer has 255 outputs: 85 outputs per anchor [4 box coordinates + 1 object confidence + 80 class confidences], times 3 anchors. If you use fewer classes, reduce filters to filters=[4 + 1 + n] * 3, where n is your class count. This modification should be made to the layer preceding each of the 3 YOLO layers. Also modify classes=80 to classes=n in each YOLO layer, where n is your class count (for single class training, n=1).

6. (OPTIONAL) Update hyperparameters such as LR, LR scheduler, optimizer, augmentation settings, multi_scale settings, etc. in train.py for your particular task. We recommend you start with all-default settings before updating anything.

7. Train. Run python3 train.py --data data/coco_1cls.data to train using your custom data. If you created a custom *.cfg file as well, specify it using --cfg cfg/my_new_file.cfg.

Visualize Results

Run from utils import utils; utils.plot_results() to see your training losses and performance metrics vs epoch. If you don't see acceptable performance, try hyperparameter tuning and re-training. Multiple results.txt files are overlaid automatically to compare performance.

Here we see results from training on coco_1cls.data using the default yolov3-spp.cfg and also a single-class yolov3-spp-1cls.cfg, available in the data/ and cfg/ folders.

Evaluate your trained model: copy COCO_val2014_000000001464.jpg to data/samples folder and run python3 detect.py --weights weights/last.pt

Reproduce Our Results

To reproduce this tutorial, simply run the following code. This trains all the various tutorials, saves each results*.txt file separately, and plots them together as results.png. It all takes less than 30 minutes on a 2080Ti.

Reproduce Our Environment

To access an up-to-date working environment (with all dependencies including CUDA/CUDNN, Python and PyTorch preinstalled), consider a:
