http://rt.dgyblog.com/ref/ref-learning-deep-learning.html

https://github.com/leiwu1990/course.math_theory_nn

http://www.mit.edu/~9.520/fall18/

2018 Shanghai Jiao Tong University Workshop on Frontiers of Deep Learning Theory - article by Zenan Ling (凌泽南) - Zhihu https://zhuanlan.zhihu.com/p/40097048

https://www.researchgate.net/project/Theories-of-Deep-Learning

Deep Neural Networks and Partial Differential Equations: Approximation Theory and Structural Properties, Philipp Petersen, University of Oxford

https://memento.epfl.ch/event/a-theoretical-analysis-of-machine-learning-and-par/

- http://at.yorku.ca/c/b/p/g/30.htm

- https://mat.univie.ac.at/~grohs/

- Topics course Mathematics of Deep Learning, NYU, Spring 18

- https://skymind.ai/ebook/Skymind_The_Math_Behind_Neural_Networks.pdf

- https://github.com/markovmodel/deeptime

- https://omar-florez.github.io/scratch_mlp/

- https://joanbruna.github.io/MathsDL-spring19/

- https://github.com/isikdogan/deep_learning_tutorials

- https://www.brown.edu/research/projects/crunch/machine-learning-x-seminars

- Deep Learning: Theory & Practice

- https://www.math.ias.edu/wtdl

- https://www.ml.tu-berlin.de/menue/mitglieder/klaus-robert_mueller/

- https://www-m15.ma.tum.de/Allgemeines/MathFounNN

- https://www.math.purdue.edu/~buzzard/MA598-Spring2019/index.shtml

- http://mathematics-in-europe.eu/?p=801

- Discrete Mathematics of Neural Networks: Selected Topics

- https://cims.nyu.edu/~bruna/

- https://www.pims.math.ca/scientific-event/190722-pcssdlcm

- Deep Learning for Image Analysis EMBL COURSE

- http://voigtlaender.xyz/

- http://www.mit.edu/~9.520/fall19/

- [angewandtefunktionalanalysis]

- https://arxiv.org/pdf/1904.05657.pdf

- CS 584 / MATH 789R - Numerical Methods for Deep Learning

- http://www.mathcs.emory.edu/~lruthot/teaching.html

- Numerical methods for deep learning

- Short Course on Numerical Methods for Deep Learning

- https://www.math.ucla.edu/applied/cam

- http://www.mathcs.emory.edu/~lruthot/

- Automatic Differentiation of Parallelised Convolutional Neural Networks - Lessons from Adjoint PDE Solvers

- https://raoyongming.github.io/

- MA 721: Topics in Numerical Analysis: Deep Learning

- https://web.stanford.edu/~yplu/DynamicOCNN.pdf

- https://zhuanlan.zhihu.com/p/71747175

- https://web.stanford.edu/~yplu/

- https://web.stanford.edu/~yplu/project.html

- A Flexible Optimal Control Framework for Efficient Training of Deep Neural Networks

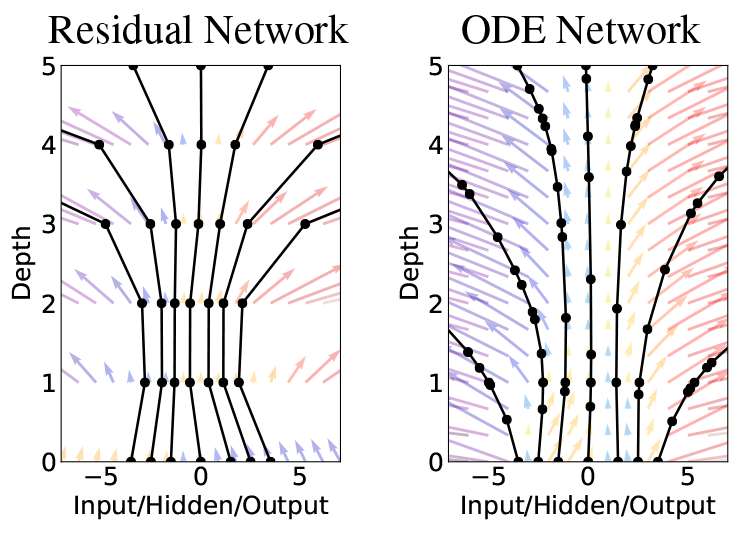

- Neural Networks as Ordinary Differential Equations

- Bridging Deep Neural Networks and Differential Equations for Image Analysis and Beyond

- Deep learning for universal linear embeddings of nonlinear dynamics

- Exact solutions to the nonlinear dynamics of learning in deep linear neural networks

- Neural Ordinary Differential Equations

- NeuPDE: Neural Network Based Ordinary and Partial Differential Equations for Modeling Time-Dependent Data

- Neural Ordinary Differential Equations and Adversarial Attacks

- Neural Dynamics and Computation Lab

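Universal approximation theory characterizes the expressive power of neural networks: even a shallow network with a single hidden layer (and a suitable non-polynomial activation) can approximate any continuous function on a compact domain to arbitrary accuracy, provided it is wide enough.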
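
A minimal sketch of the "wide but shallow" idea in one dimension, assuming ReLU activations and a constructive (not trained) network: a single hidden layer of `width` ReLU units can reproduce the piecewise-linear interpolant of a target function at `width + 1` equally spaced knots on [0, 1], so the approximation error shrinks as the layer gets wider. The target `sin(2*pi*x)` and the helper names (`build_shallow_relu_net`, `evaluate`, `max_error`) are illustrative assumptions, and this covers only the 1D case, not the general theorem.

```python
import math


def relu(z: float) -> float:
    """Rectified linear unit."""
    return max(0.0, z)


def build_shallow_relu_net(f, width):
    """Hypothetical helper: build a one-hidden-layer ReLU net
        g(x) = bias + sum_i weights[i] * relu(x - breakpoints[i])
    that linearly interpolates f at width + 1 equally spaced knots on [0, 1].
    Each hidden unit has input weight 1 and bias -breakpoints[i]."""
    knots = [i / width for i in range(width + 1)]
    values = [f(x) for x in knots]
    # Slope of the interpolant on each interval [knots[i], knots[i+1]].
    slopes = [(values[i + 1] - values[i]) / (knots[i + 1] - knots[i])
              for i in range(width)]
    # Each ReLU switches on at a knot and adds the change in slope there.
    weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, width)]
    return values[0], weights, knots[:-1]


def evaluate(net, x):
    """Forward pass of the shallow network at a scalar input x in [0, 1]."""
    bias, weights, breakpoints = net
    return bias + sum(w * relu(x - b) for w, b in zip(weights, breakpoints))


def max_error(f, net, samples=1000):
    """Largest absolute error on a uniform grid of samples + 1 points."""
    grid = (j / samples for j in range(samples + 1))
    return max(abs(f(x) - evaluate(net, x)) for x in grid)


def target(x):
    return math.sin(2 * math.pi * x)


for width in (4, 16, 64, 256):
    net = build_shallow_relu_net(target, width)
    print(f"width={width:4d}  max error={max_error(target, net):.2e}")
```

The construction is chosen because it makes the role of width explicit: every extra hidden unit adds one more breakpoint, and for a smooth target the interpolation error decays roughly like `1 / width**2`.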

- https://deeplearning-math.github.io/

- Deep Neural Network Approximation Theory

- Provable approximation properties for deep neural networks

- Deep Learning: Approximation of Functions by Composition

- DGD Approximation Theory Workshop

- https://arxiv.org/abs/1804.04272

- https://deepai.org/machine-learning/researcher/weinan-e

- https://deepxde.readthedocs.io/en/latest/

- https://github.com/IBM/pde-deep-learning

- https://github.com/ZichaoLong/PDE-Net

- https://github.com/amkatrutsa/DeepPDE

- https://github.com/maziarraissi/DeepHPMs

- https://maziarraissi.github.io/DeepHPMs/

- DGM: A deep learning algorithm for solving partial differential equations

- A Theoretical Analysis of Deep Neural Networks and Parametric PDEs

- NeuralNetDiffEq.jl: A Neural Network solver for ODEs

https://arxiv.org/abs/1806.07366 https://rkevingibson.github.io/blog/neural-networks-as-ordinary-differential-equations/

- http://cpaior2019.uowm.gr/

- https://github.com/tankconcordia/deep_inv_opt

- https://amds123.github.io/2019/01/13/Neumann-Networks-for-Inverse-Problems-in-Imaging/

- https://github.com/mughanibu/Deep-Learning-for-Inverse-Problems

- https://cv.snu.ac.kr/research/VDSR/

- https://arxiv.org/abs/1803.00092

- https://deep-inverse.org/

- https://earthscience.rice.edu/mathx2019/

- Deep Learning and Inverse Problem

- https://www.scec.org/publication/8768

Random matrix theory studies matrices whose entries are sampled from specified probability distributions. The weight matrices of deep neural networks are initialized randomly. However, these models are heavily over-parameterized, so it is hard to determine the role of any individual parameter; random matrix theory instead describes the weights statistically, in aggregate.
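
As an illustrative sketch of this aggregate view, assume a p x n weight matrix W initialized with i.i.d. Gaussian entries of variance 1/n (a common choice, but an assumption here). A classical random matrix result, the Marchenko-Pastur law, predicts the eigenvalue distribution of W W^T for ratio c = p/n; its first two moments are 1 and 1 + c. The sketch below (hypothetical helper names, plain Python) estimates those moments from traces, since (1/p) tr(S^k) is the k-th moment of the eigenvalues of S, so no eigendecomposition is needed.

```python
import random


def random_weight_matrix(p, n, rng):
    """p x n matrix with i.i.d. N(0, 1/n) entries (assumed initialization)."""
    scale = 1.0 / n ** 0.5
    return [[rng.gauss(0.0, scale) for _ in range(n)] for _ in range(p)]


def gram(w):
    """Return S = W W^T as a p x p list of rows, filling both halves."""
    p = len(w)
    s = [[0.0] * p for _ in range(p)]
    for i in range(p):
        for j in range(i, p):
            v = sum(a * b for a, b in zip(w[i], w[j]))
            s[i][j] = s[j][i] = v
    return s


def spectral_moments(s):
    """First two eigenvalue moments of symmetric S via traces:
    m1 = (1/p) tr(S), m2 = (1/p) tr(S^2) = (1/p) * sum of squared entries."""
    p = len(s)
    m1 = sum(s[i][i] for i in range(p)) / p
    m2 = sum(v * v for row in s for v in row) / p
    return m1, m2


rng = random.Random(0)  # fixed seed so the run is reproducible
p, n = 100, 200
c = p / n
m1, m2 = spectral_moments(gram(random_weight_matrix(p, n, rng)))
print(f"empirical:        m1={m1:.3f}  m2={m2:.3f}")
print(f"Marchenko-Pastur: m1=1.000  m2={1 + c:.3f}")
```

No single entry of W carries meaning here, yet the spectrum of W W^T as a whole is predictable from p and n alone, which is the kind of statement the resources below make precise for nonlinear networks.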

- Recent Advances in Random Matrix Theory for Modern Machine Learning

- http://romaincouillet.hebfree.org/

- https://zhenyu-liao.github.io/

- https://dionisos.wp.imt.fr/

- Features extraction using random matrix theory

- Nonlinear random matrix theory for deep learning

- A Random Matrix Approach to Neural Networks

- Tensor Programs: A Swiss-Army Knife for Nonlinear Random Matrix Theory of Deep Learning and Beyond

- Scaling Limits of Wide Neural Networks with Weight Sharing: Gaussian Process Behavior, Gradient Independence, and Neural Tangent Kernel Derivation

http://www.vision.jhu.edu/tutorials/CVPR16-Tutorial-Math-Deep-Learning-Raja.pdf

- http://otml17.marcocuturi.net/

- https://www-obelix.irisa.fr/files/2017/01/postdoc-Obelix.pdf

- http://www.cis.jhu.edu/~rvidal/talks/learning/StructuredFactorizations.pdf

- http://cmsa.fas.harvard.edu/wp-content/uploads/2018/06/David_Gu_Harvard.pdf

- https://mc.ai/optimal-transport-theory-the-new-math-for-deep-learning/

- https://www.louisbachelier.org/wp-content/uploads/2017/07/170620-ilb-presentation-gabriel-peyre.pdf

- http://people.csail.mit.edu/davidam/

- https://www.birs.ca/events/2020/5-day-workshops/20w5126

- https://github.com/hindupuravinash/nips2017